It’s 2024, and everybody’s in the cloud, using on-demand computing (be it storage, servers, or services) accessed over the internet.

We’re constantly making more data, and storing most of it online (sometimes in duplicate or triplicate). More and more of the daily services we use, like AI, are provided through the cloud.

More than 98% of organizations use the cloud now (and 2.3 billion plus individuals just for storage), with companies indicating they’re dedicating 80% of total IT budgets this year to cloud costs alone.

From the start, one of the main draws of a move to the cloud has been its efficiency. With pay-per-use pricing, uneven demand can be managed more easily, resources pooled and maximized, and redundancies removed.

The data centers providing these cloud computing services can limit downtime, develop customized cooling, maximize automation, and utilize state-of-the-art security. They can promise a lower per-unit energy consumption because of sheer scale.

It’s all these benefits that have seen the hyperscalers, or the very largest cloud providers, flourish. Just three cloud providers account for roughly two-thirds of all rentable cloud computing, with two of them owning more than half the market share (Amazon’s AWS at 32% and Microsoft’s Azure at 23%).

Along with Google (10%), these hyperscalers dominate public cloud computing, and now are also at the forefront of offering emerging AI technologies.

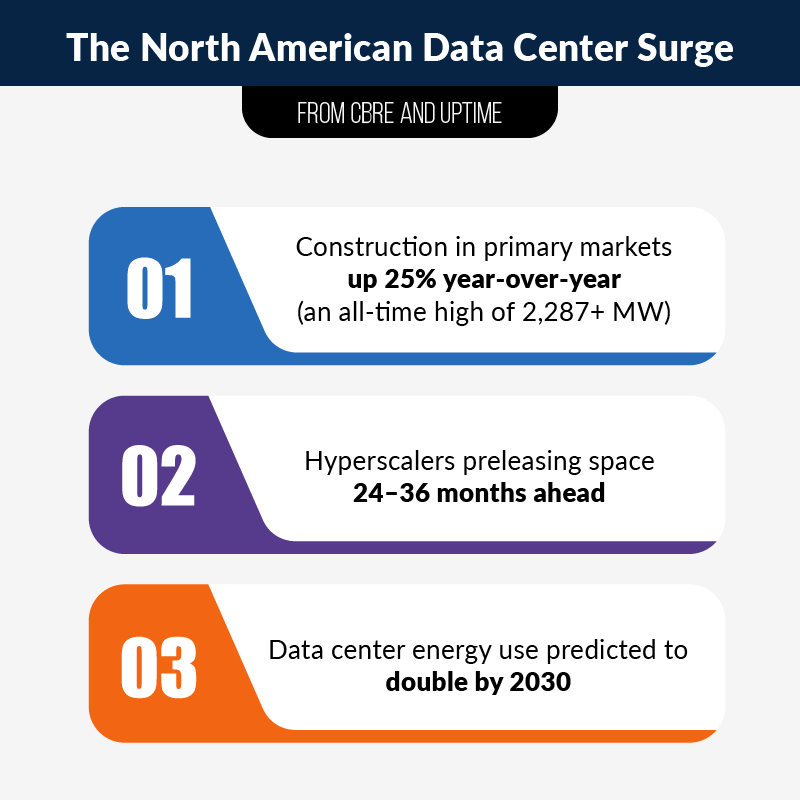

And with the hunger for cloud services outstripping availability, vacancy in data centers is the lowest it’s been in a decade across all major US markets. In short: data centers to provide cloud computing cannot be built, powered, and equipped for AI fast enough to meet the demand.

Countless statistics show cloud solutions as potentially far more sustainable than on-premises options, but with all the unmet data center demand comes more competition for power and space, urgency in construction, and less selectivity, making net-zero sustainability goals long promised by cloud providers harder (and more expensive) to reach.

All at a time when organizations face ever more pressure to be sustainable.

With this increased competition for resources, more regulation, and tighter reporting, a sustainability reckoning is coming, according to Uptime:

“In August 2023, for example, the UN-backed Science Based Targets initiative (SBTi) removed Amazon’s operations (including Amazon Web Services) from its list of committed companies because Amazon had failed to validate its net-zero emissions target.

The CDP, previously known as the Carbon Disclosure Project and the most comprehensive global registry of corporate carbon emission commitments, reported that of the 19,000 companies with registered plans on its platform, only 81 plans were credible.”

In this week’s PTP Report, we take a look at this conundrum around the environmental impact of cloud computing: sustainability in the age of AI. How do we get to green cloud computing, with more green data centers, using the most sustainable cloud practices?

How Green Is It Now, Really?

Data centers are energy-intensive by nature, requiring not only power to run, but also to cool their vast arrays of equipment.

According to the US Department of Energy and the Climate Neutral Group, the data centers that provide our cloud computing currently use as much as 3% of the world’s electricity and contribute 2% of global CO2 output, or nearly as much as the entire global airline industry.

The three biggest providers, the hyperscalers (AWS, Azure, Google), have dedicated themselves publicly to providing data centers that run on sustainable energy, but so far none have managed to remove fossil fuel use entirely. The result is a significant carbon footprint, contributing additional output in an area computing aims to mitigate.

In practice, many cloud providers rely on Renewable Energy Credits (RECs), which represent green energy generation, to reach their goals. By amassing enough RECs, they can claim to be fueled by renewable power, even if the data centers themselves remain on the traditional grid and are increasingly built closer to traditional power sources.
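To make the accounting concrete, here is a minimal Python sketch of how a REC-based claim can differ from what the local grid physically delivers. It assumes the common convention that one REC represents 1 MWh of renewable generation; the function name and all figures are hypothetical and do not describe any real provider.

```python
MWH_PER_REC = 1.0  # assumed convention: one REC represents 1 MWh of renewable generation


def rec_matched_share(consumption_mwh: float, recs_retired: float) -> float:
    """Share of consumption covered by RECs on paper, capped at 100%."""
    if consumption_mwh <= 0:
        raise ValueError("consumption_mwh must be positive")
    return min(1.0, recs_retired * MWH_PER_REC / consumption_mwh)


# Hypothetical data center: 100,000 MWh used in a year on a grid that is 30% renewable.
consumption_mwh = 100_000
grid_renewable_fraction = 0.30  # illustrative value, not a measured grid mix

claimed_share = rec_matched_share(consumption_mwh, recs_retired=100_000)
physical_share = grid_renewable_fraction  # what the grid itself actually supplied

print(f"Claimed via RECs: {claimed_share:.0%}, delivered by grid: {physical_share:.0%}")
```

The gap between the two numbers is the point: the claim is an accounting match over the year, not a description of the electricity that reached the servers.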

AI and Sustainability in the Cloud

There’s no doubt that AI and machine learning technologies are part of this equation, on both sides.

An example by Equinix shows how AI can directly improve data center efficiency, by modifying cooling to adjust energy use for changes in the exterior weather. At a data center in Frankfurt, AI enabled them to make an existing data center 9% more efficient.

But while AI has the potential to optimize operations, reduce waste, and enhance energy efficiency, AI models are also (currently) notoriously energy-intensive: their computational demands translate directly into energy demands. In one example from our PTP bi-monthly AI Roundup, a GPT-3 model used only for drug trials consumed as much power as 120 US homes do in an entire year.

As AI is increasingly integrated into cloud services, the energy consumption associated with developing and running these models will add a significant burden, potentially offsetting gains made elsewhere, at least initially.

But in the very near term, it may be the limited availability of chips that does more to throttle AI energy demands. AI cloud use that’s centered at established, massive hyperscaler data centers can moderate the environmental impact, but this area will most certainly be an issue to keep an eye on in the years to come.

Making Your Own Cloud Greener

Even if your cloud provider isn’t currently as sustainable as hoped, repatriation isn’t possible or wouldn’t be any cleaner, and AI demands may make the challenge harder, there are still steps organizations can take to be proactive and make their cloud use more sustainable.

Cloud Provider Sustainability

The most obvious solution is to find and use providers with the most energy-efficient data centers. This impact may also be the most significant on the list, but it’s obviously not as easy as it sounds.

Lock-in, where a cloud provider makes it very difficult and expensive to move services to another provider, has been an increasing concern for cloud users in recent years.

It’s also drawn the attention of regulators, both generally, as with the European Data Act, which came into effect this January, and specifically, as in the UK antitrust probe by the Competition and Markets Authority (CMA).

Much of the latter is focused on egress fees, wherein hyperscale providers charge fees (or employ other means of throttling data movement) that make moving data or services out to other providers far more difficult.

The good news is that this may have already had an impact: Google (as the smallest hyperscale provider) led the way in declaring it would do away with egress fees, and just this month Amazon followed suit, opening the door for more organizations to embrace a multi-cloud approach.

(For more on this or even the growing interest in repatriation, check out our PTP article on cloud trends for 2024.)

This freedom to mix and match gives companies far more power to put their money where their stated ideals are. By 2025, Gartner predicts carbon emissions will be one of the three most important determiners for customers purchasing cloud services, and not all cloud providers are created equal when it comes to sustainability.

Of the three hyperscalers, it’s probably no surprise that the one with the smallest market share, Google, has the most valid claim to sustainability. Google calls itself the “largest corporate buyer of renewable energy in the world,” having made major investments in renewable energy and carbon neutrality.

Google has matched 100% of its electricity consumption with renewable energy since 2017 and makes extensive use of machine learning for optimization.

A company’s cloud provider of choice also sends a strong message about values and one’s commitment to environmental promises.

Optimizing Your Own Footprint

If starting from scratch, good strategic planning and a sufficient commitment of resources can see businesses use the architecture of whichever public cloud they’ve selected to maximum efficiency, such as by leveraging auto-scaling features.

A more realistic scenario sees clients paying far more for resources than they need because of imperfect designs, heavily changed usage, and the perception that they have neither the time nor the money to optimize and adjust on the fly.

It can be a serious challenge to ensure your cloud resources are not underutilized or over-provisioned. Amazon Web Services (AWS), Google Cloud Platform, and Microsoft Azure all offer tools and insights to help manage and monitor resource utilization. For instance, AWS’s Trusted Advisor provides recommendations to reduce excess capacity, thereby lowering costs and reducing energy consumption. Similarly, Google Cloud’s Sustainable AI solutions aim to make AI deployments more energy-efficient.
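As a rough illustration of what such a utilization check involves, the Python sketch below flags instances whose average CPU use falls under a threshold. It is not how Trusted Advisor or any provider tool works internally; the `Instance` class, the 20% threshold, and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Instance:
    name: str
    cpu_samples: list  # CPU utilization percentages, e.g. hourly averages


def find_underutilized(instances, threshold_pct=20.0):
    """Return names of instances whose average CPU utilization is below the threshold."""
    return [
        inst.name
        for inst in instances
        if inst.cpu_samples and mean(inst.cpu_samples) < threshold_pct
    ]


# Hypothetical fleet: one busy web server, one mostly idle batch machine.
fleet = [
    Instance("web-1", [55.0, 60.0, 70.0]),
    Instance("batch-2", [3.0, 5.0, 4.0]),
]
candidates = find_underutilized(fleet)  # instances worth downsizing or consolidating
```

In practice CPU alone is rarely enough; memory, network, and peak load would all factor into a real rightsizing decision.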

Data storage is a critical component of cloud services, and its management can also play a role in ensuring cloud sustainability. By compressing data, deleting old or unnecessary files, and employing storage tiering, organizations can reduce their storage footprint and, with it, their energy consumption.

As an example, Azure Blob Storage offers multiple tiers (such as Hot, Cool, Cold, Archive) that enable businesses to store data more cost-effectively based on access frequency and retention periods. This kind of management helps further optimize energy use.
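A tiering policy of this kind can be sketched in a few lines of Python. The tier names come from the paragraph above, but the day thresholds and the `choose_tier` function are illustrative assumptions, not Azure's own rules or API; a real policy would be set from your access patterns and the provider's pricing.

```python
def choose_tier(days_since_last_access: int) -> str:
    """Pick a storage tier from how long ago the data was last read.

    The cutoffs (30, 90, 180 days) are illustrative assumptions only.
    """
    if days_since_last_access < 0:
        raise ValueError("days_since_last_access cannot be negative")
    if days_since_last_access < 30:
        return "Hot"
    if days_since_last_access < 90:
        return "Cool"
    if days_since_last_access < 180:
        return "Cold"
    return "Archive"


# Hypothetical objects and the number of days since each was last accessed.
last_access_days = {"daily-report.csv": 2, "q3-logs.tar": 120, "2019-backup.img": 900}
plan = {name: choose_tier(days) for name, days in last_access_days.items()}
```

Providers typically let you express similar rules declaratively as lifecycle policies, so a script like this is mainly useful for estimating what a policy would move before enabling it.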

Promoting Sustainability Via DevOps

Sustainability in the cloud begins upstream, too. By incorporating sustainability into DevOps practices, a company can work to create efficient, repeatable, and scalable environments that reduce waste from the ground up.

By automating the setup and teardown of infrastructure, businesses can minimize idle time for resources, ensuring they are in use only when needed. Cloud providers offer tools to this end as well, such as AWS CloudFormation, which allows for precise control over cloud resources, enabling teams to deploy environments that match demand.

This approach not only optimizes resources, but also cuts down on the energy consumption associated with underutilized servers.
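The decision logic behind automated teardown can be quite small. The Python sketch below picks out environments that have been idle longer than a cutoff; the environment names, timestamps, and two-hour cutoff are hypothetical, and the actual deletion step (for example, removing a stack through your provider's tooling) is deliberately left out.

```python
from datetime import datetime, timedelta


def environments_to_tear_down(last_activity, now, max_idle=timedelta(hours=2)):
    """Return the names of environments idle for longer than max_idle, sorted."""
    return sorted(
        name for name, seen in last_activity.items() if now - seen > max_idle
    )


# Hypothetical activity log: environment name -> time of last recorded use.
now = datetime(2024, 3, 1, 18, 0)
last_activity = {
    "dev-a": datetime(2024, 3, 1, 17, 30),  # used 30 minutes ago
    "qa-b": datetime(2024, 3, 1, 9, 0),     # untouched for 9 hours
}
idle = environments_to_tear_down(last_activity, now)
```

Run on a schedule, a check like this keeps test and staging environments from sitting idle overnight or over weekends.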

[For a more detailed look at emerging DevOps, check out our PTP Report on the DAO, a glimpse at a future of fully integrated DevOps and AI.]

Directly Leveraging AI

AI and machine learning are powerful tools that can also be optimized for sustainability. By choosing algorithms that are energy-efficient, and scaling down to only the computational resources needed when training models, businesses can minimize their environmental impact. For instance, using Google’s TensorFlow for more efficient machine learning operations can help in reducing energy use.
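A back-of-the-envelope estimate shows why right-sizing a training run matters. The Python sketch below multiplies accelerator count, average power draw, and run time to get energy in kWh; the function and every number in it are hypothetical, and it ignores cooling and other facility overhead.

```python
def training_energy_kwh(num_accelerators: int, avg_power_watts: float, hours: float) -> float:
    """Estimate training energy in kWh from accelerator count, power draw, and duration."""
    if num_accelerators < 0 or avg_power_watts < 0 or hours < 0:
        raise ValueError("inputs must be non-negative")
    return num_accelerators * avg_power_watts * hours / 1000.0


# Hypothetical comparison: the same job on an oversized and a right-sized allocation.
oversized = training_energy_kwh(num_accelerators=8, avg_power_watts=300, hours=10)
right_sized = training_energy_kwh(num_accelerators=2, avg_power_watts=300, hours=10)
savings_kwh = oversized - right_sized
```

The comparison assumes the smaller allocation finishes in the same time, which only holds when the extra accelerators were mostly idle; if a run takes proportionally longer on fewer devices, the savings shrink accordingly.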

And as discussed above, AI use is already making cloud data centers more efficient, and this trend should only continue with greater sophistication and application.

Conclusion

One benefit of the cloud has always been efficiency at scale. Pair this with access to emerging technologies, services, and standardized security, and the cloud can offer organizations computing that might otherwise be out of reach.

But balancing these benefits with true sustainability in this new age of AI in the cloud is going to be highly complex, posing a challenge for organizations, at least in the short term, just as the pressure to be more sustainable is increasingly falling on them.

Balancing this surge of demand with prudent energy use and the lowest possible carbon output requires not only a concerted effort from cloud providers, but also from their customers, who ultimately drive hyperscaler decisions with their own business.

By prioritizing greener providers, optimizing their own use for maximum efficiency, and embracing innovation, organizations can actually fulfill the promise of the cloud as a greener way to compute at scale.