Summer is here and the AIs are online: browsing catalogs, placing orders, summarizing our searches, and reading and writing emails and articles (not this one). At some point, AI-built websites will be surfed primarily by AIs, raising the question: how does that change web design?

According to the 2024 Imperva Threat Research report, there are now as many bots online as people, and nearly a third of all traffic comes from so-called “bad bots” that scrape the web, harvest data, and perpetrate transaction fraud. They’re now so good at mimicking human behavior that it’s becoming difficult to tell the two apart.

(But people still fail CAPTCHA tests 2/3 of the time, according to Google.)

Welcome to the summer kickoff edition of our emerging AI trends 2024 bi-monthly report!

In this edition, we cover key (and interesting) events from May and June, like the Big Tech rollouts, mad growth, regulation highlights (the first US state AI law was passed), and some of those quirky things that always abound with generative AI.

AI Growth Continues Apace

There’s been a lot of speculation that the AI bubble would burst in 2024, but now we’re halfway through, and the end’s not yet in sight.

On June 18th, Nvidia, whose stock had just split 10-for-1, officially became the world’s most valuable company, capping a stunning run of growth that’s among the fastest in US market history.

[We’ve already written about the Nvidia AI developments, and you can check them out here.]

AI has been buoying the American stock market, but the entire world is investing. Saudi Arabia, seeking to complement its oil empire, created a $100 billion fund to invest in the field, with Amazon committing $5.3 billion to data centers and AI tech there. And in addition to its dedicated financial resources, Saudi Arabia has a key AI requirement that is increasingly in short supply: power.

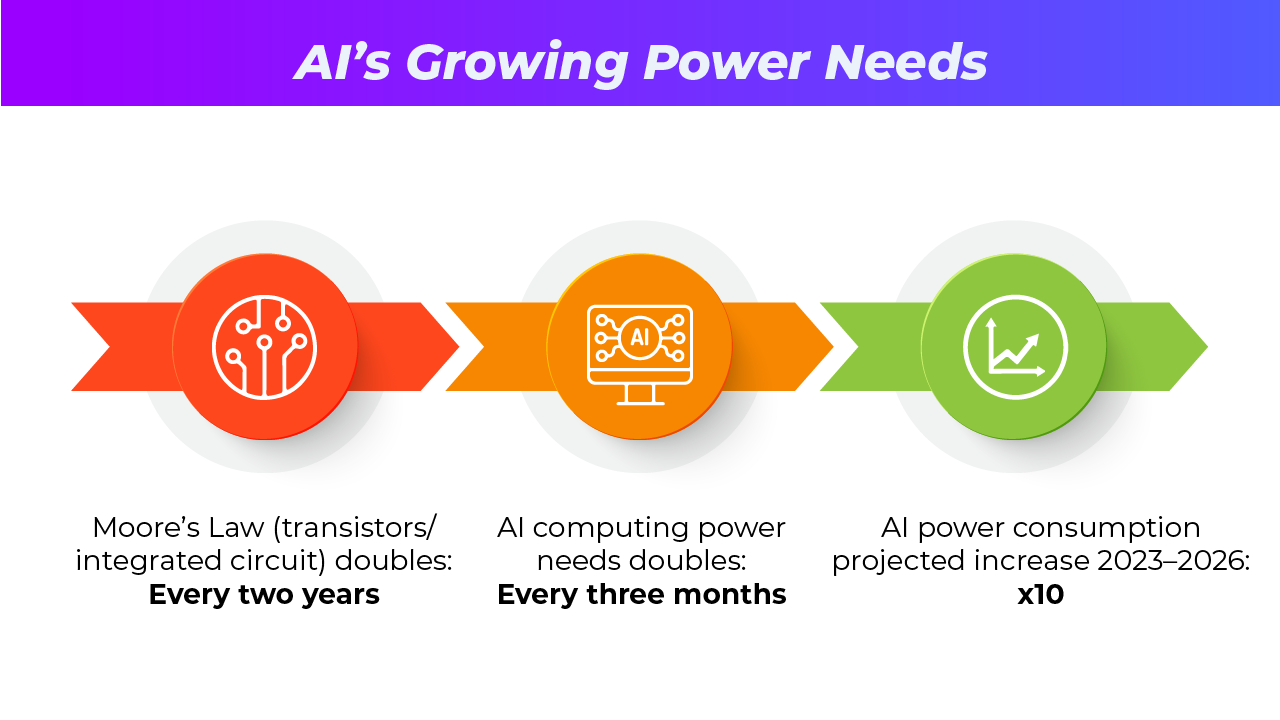

AI is now wildly outpacing Moore’s law, the observation (that’s held generally true since 1975) that transistor density in a circuit doubles every two years for around the same relative price.

As discussed in this Quanta Magazine piece profiling light-based chips as an alternative, the International Energy Agency predicts that by 2026 data centers alone will consume as much energy as all of Japan.

[For more coverage of the surge in data center construction and the power demands, check out How Green is My Cloud.]

Keep your eye on Palantir Technologies (yes, à la Lord of the Rings), as the defense- and intelligence-oriented big data and analytics company is finding great success in AI (its stock nearly tripled from April 2023 to April 2024).

After landing a $480 million contract with the US Department of Defense in late May, Palantir has also been applying its technology to private industry (United Airlines, Lear, Wendy’s) and medical centers through its AIP platform.

Speaking of seeing, AI-generated video is surging forward on the back of advances from startup Luma AI. Its “Dream Machine” launched in June with a free model you can try just by registering.

There are ever more AI video offerings out there: OpenAI’s Sora was unveiled in February but is still not public, while Kuaishou’s Kling became the first text-to-video model of its kind to be freely released. Kuaishou’s short-video platform already has 600 million active users.

There are also Pika, Runway, and Haiper, plus tools like Renderforest, HeyGen, DeepBrain, Fliki, Synthesia, and many more, and we can expect far more to come.

Big Tech Rollouts

In May and June, Big Tech had a showcase arms race, with Google’s I/O dedicated to AI, Microsoft revealing massive PC upgrades, and Apple (finally) jumping into AI in a big way, across its range of products.

Numerous articles have been devoted to each of these, but in brief: Google’s fusion of AI and search hit some serious bumps (famously suggesting glue as a pizza ingredient, advising people to eat rocks, and calling Barack Obama the first Muslim president). Microsoft’s Recall functionality was itself recalled (over enormous security holes, not to mention privacy concerns). And Apple’s release, which includes a partnership with OpenAI, felt like news from last year, though it was inarguably the safest of the bunch.

Meanwhile OpenAI, which has deals with both Microsoft and Apple, rolled out GPT-4o, a model that had been blowing up the LMSYS Chatbot Arena ahead of release.

[For more on the Chatbot Arena, and how AI models are measured, check out this edition of The PTP Report.]

One of the top models across benchmarks, GPT-4o is not a full step forward, but it made news for its voice options and its stronger visual and multilingual capabilities. Scarlett Johansson threatened legal action against the company over the “Sky” voice, leading to it being disabled. (It was later revealed that OpenAI had repeatedly sought her permission, channeling the movie Her, but she refused.)

OpenAI also parted ways with chief scientist Ilya Sutskever (a cofounder and former board member), who co-led its internal Superalignment safety team, and shut that team down altogether. A new oversight committee will be created, but it includes CEO Sam Altman, who has also suggested the company could shift its structure to become a for-profit corporation.

Amazon’s own AI integrations are running apace, with one example being Project PI (Private Investigator), using genAI to scan products for damage. One of a series of tools based around customer returns and quality control, PI helps flag issues and decide if they’re legit, and if damaged goods can be resold or donated.

Regulation Updates

The EU led the world with AI regulations (taking full effect in 2026), and now they’re in the thick of enforcement. During this period, regulators pressed Microsoft for documentation on Copilot usage through Bing, on the basis of potential hallucination and risk-disclosure.

In June, Meta was forced to pause the launch of its AI models in Europe, following a request from Ireland prompted by fears that the company would train the models on Facebook and Instagram user data without consent.

And Apple announced it will delay its Apple Intelligence launch in Europe due to regulatory concerns.

In May, South Korea hosted the latest international AI safety summit, following a pledge by 14 companies (including Google, Microsoft, and OpenAI) to use watermarking to help flag AI-generated content, ensure job creation, and work to protect vulnerable groups.

“Cooperation is not an option, it is a necessity,” Lee Jong-ho, South Korea’s Minister of Science and ICT, told Reuters.

In the United States, AI-related antitrust inquiries are underway against Nvidia, Microsoft, and OpenAI, though the position of national CTO remains open.

The first US state AI regulations also became law in May, in Colorado (also taking full effect in 2026). These require compliance from both creators and users of AI, and include a mandated annual review for discrimination. There are requirements for risk management, systems review after modifications, and notification of customers when AI has been used in decision making.

Like most of the state laws being considered, it also gives consumers the right to opt out of profiling.

Conclusion

As we wrap up, here are a few other AI anecdotes from the past two months:

- AI only “thinks” when it’s writing/outputting. So prompting it to give short or direct answers (as noted by the Wharton School’s Ethan Mollick) could actually give you dumber, or less-considered, results by cutting off valuable reasoning.

- From the MIT Technology Review, a Shanghai-based company has updated the Chinese tradition of putting up portraits of recently deceased relatives with an AI variant: a picture frame with an interactive deepfake of the departed. The company also produces deepfaked video calls with lost loved ones, and has seen tremendous demand so far.

- Wired in June covered a human being running for mayor in Wyoming who promises, if he wins, to let every decision be made by VIC (Virtual Integrated Citizen), a ChatGPT-based mayor bot he created. And VIC’s not the only one running for office, with numerous outlets covering AI Steve, a digital avatar running for a seat in the British Parliament. If you’re interested, you can chat with Steve directly on his own website!

- The Smithsonian Magazine wrote in June about a flamingo image that placed in the AI-generated category of a photography competition (taking third place and the People’s Vote award), only to be disqualified because it wasn’t made by an AI at all. In what may be the first case of a human caught pretending to be an AI, the artist claimed the goal was to invert the story of AIs outshining human photographers. (But in any case, it’s still against the rules.)

We hope you’ve enjoyed our coverage of AI innovation from May and June 2024! You can expect the next installment of our AI roundup to bookend summer, in late August.

References

AI Needs Enormous Computing Power. Could Light-Based Chips Help?, Quanta Magazine

Microsoft’s Copilot+ PCs Have Changed What It Means To Be An AI PC, Forbes

The truth about those annoying CAPTCHA tests, Scienceline

2024 Bad Bot Report, Imperva

‘To the Future’: Saudi Arabia Spends Big to Become an A.I. Superpower, The New York Times

Pentagon awards $480 million deal to Palantir for ‘Maven’ prototype, Reuters

Time100 Most Influential Companies 2024: Palantir, Time

I tested out a buzzy new text-to-video AI model from China, MIT Technology Review

Microsoft is reworking Recall after researchers point out its security problems, Ars Technica

Amazon’s Project PI AI looks for product defects before they ship, The Verge

Colorado Passes Groundbreaking AI Discrimination Law Impacting Employers, Forbes

OpenAI’s Chief Scientist and Co-Founder Is Leaving the Company, The New York Times

Meta pauses AI models launch in Europe due to Irish request, Reuters

Deepfakes of your dead loved ones are a booming Chinese business, MIT Technology Review

An AI Bot Is (Sort of) Running for Mayor in Wyoming, Wired

How a Real Photo of a Flamingo Snuck Into—and Won—an A.I. Art Competition, Smithsonian Magazine