The big open-source AI models are here.

Meta’s long-anticipated Llama 3.1 arrived officially in late July, and the company claims it’s the first open-source model to outperform GPT-4o and Claude 3.5 Sonnet. CEO Mark Zuckerberg says open-source is the future, and that Meta will have the most widely used assistant by year’s end.

And it’s not the only one: numerous large, open-source models hit their stride in July and August 2024, including Mistral’s Large 2, Athene-70B, Google’s Gemma 2, AI21’s Jamba, 01.AI’s Yi, and more.

Meanwhile, the big powers keep racing on. Nvidia’s earnings are expected after the bell Wednesday, with revenue projected to be up some 110% year over year again. Its stock, as of this writing, is up 160% for the year and 3,000% over the past five years.

In this edition of our bimonthly AI roundup, we look at the open-source eruption and the debate around it, consider some of the darker prospects around AI, check in on governance, and spotlight emerging AI trends for the months of July and August 2024.

Democratizing AI

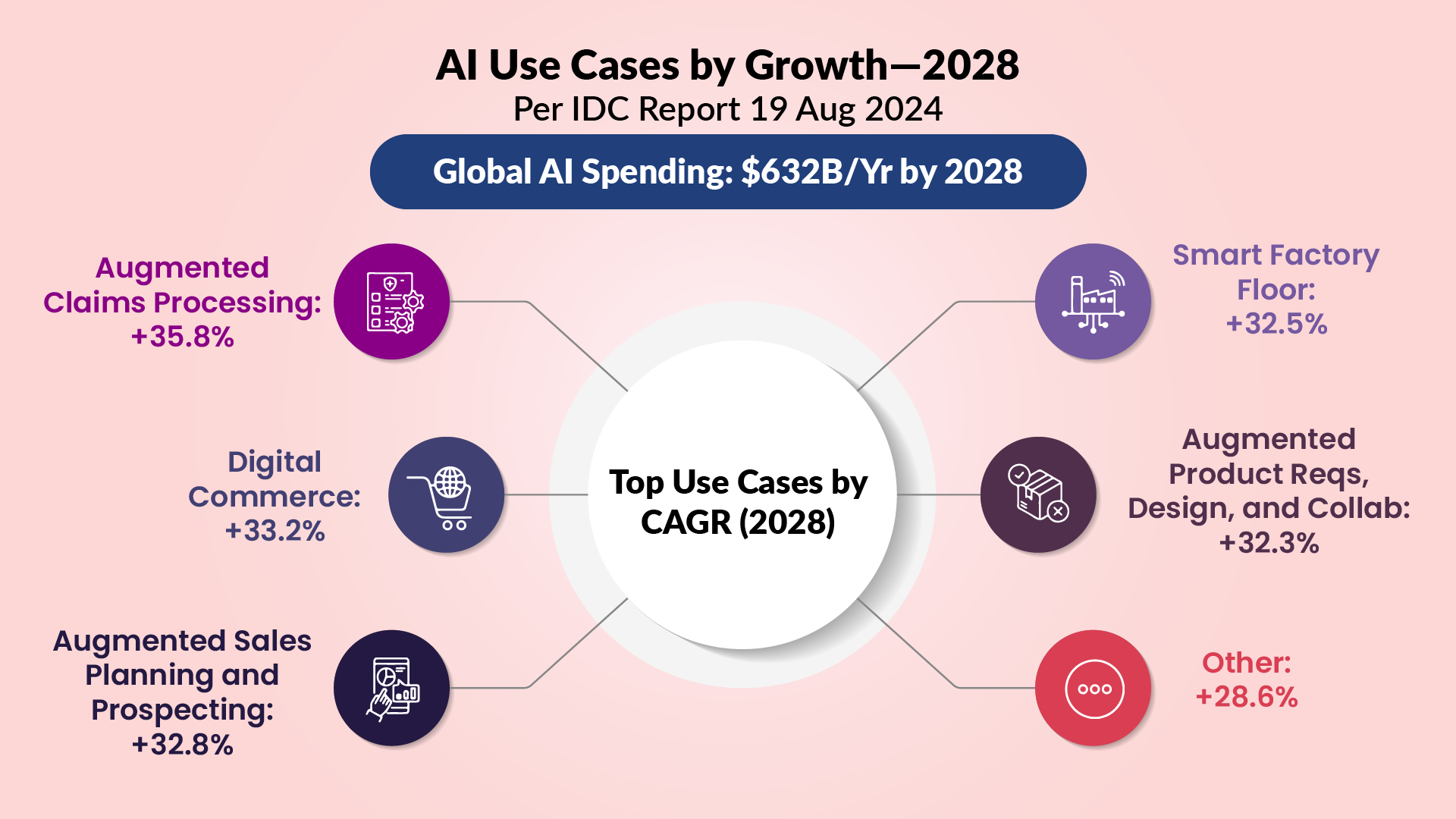

Big AI spending continues to surge, as indicated in the numbers below, with predicted use cases by growth:

But the arrival of these new open-source AI models has brought democratized AI back to the forefront of conversation, starting with how you define it.

The Open Source Initiative (OSI) gathered a panel of experts from across the field (including from Google, Meta, and Amazon) to develop its definition. To be truly open-source, an AI system must be freely available, usable for any purpose without permission, and open to inspection of all its components so that anyone can study how it works. Users must also be able to modify it, change its output, and share it with others to use for any purpose.

Many developers, like Near.AI founder (and member of Google’s Transformer 8) Illia Polosukhin, argue that Meta’s Llama AI models are not truly open-source technology, as their licenses restrict use and their training data sets aren’t fully public.

He, like several of his peers who helped introduce the transformer model back in 2017, argues that open-source is essential to staving off the worst possible outcomes of AI: it helps keep the technology safe from bad actors and guards against the possibility that AGI might cause a perilous disruption of the economy.

Polosukhin has argued that the big tech companies lack defense-level security. That makes the possibility of bad actors hacking their systems (including embedding so-called sleeper agents in models that can reveal themselves at a later time) high enough to justify openness from a security perspective as well.

But the counterargument is that widely open AI at the highest levels gives cyber criminals an open door to a radically powerful (and little understood) technology, only accelerating its adoption. Without time to fully scrutinize and understand it, many fear outcomes that haven’t even been considered (see below).

And at a national level, fears of widely open AI getting into the hands of terrorist organizations or repressive states actively drive policy.

Scary Badness

And speaking of fears, these are also still growing, among experts if not in the general population.

In terms of current applications, July saw the emergence of the term “human washing,” possibly originating with AI researcher Emily Dardaman in an interview with Wired. A play on “greenwashing” (businesses marketing environmental initiatives more than actually implementing them), it describes cases where we don’t even know we’re talking to an AI, even as companies play up the human benefits.

Without more clarity and regulation here, all sorts of ramifications arise (human-sounding AI may be nicer to talk to, but shouldn’t we know when we’re not talking to a person?). These are exacerbated in examples like Perplexity, which has drawn fire (including from Forbes’ chief content officer Randall Lane) for going beyond using stolen data in training to directly repurposing it without attribution in response to prompts.

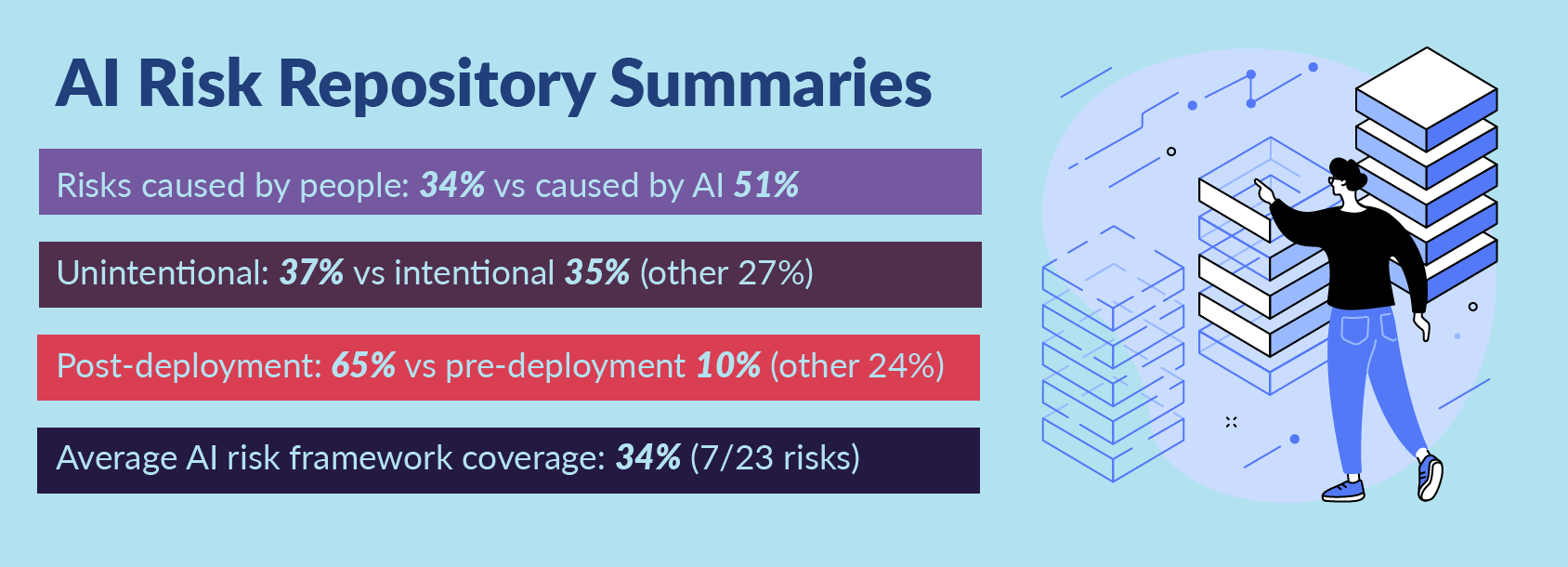

Considering where AI can go wrong is the basis for sites like the AI Incident Database, a “repository of problems experienced in the real world” for researchers and developers, and MIT’s AI Risk Repository, which charts potential AI risks in an effort to help industry and academia alike better understand the full picture.

From their analysis of the findings, they discovered that:

In these cases, the chronicling of AI failures and risks isn’t fearmongering. It is instead aimed at uncovering the worst possible outcomes so that future systems can be better aligned and regulators can better address actual risk cases, preventing so-called doomer scenarios from ever coming to fruition.

Governance, Hallucination, and Global Developments

July and August had news on all of these issues, and The PTP Report has you covered on these AI industry updates, with recent in-depth articles on:

- The emergence of RAG and current work to curb hallucination

- European attempts to lead in regulating AI, as in other areas

- The international scramble for AI relevance, including the US effort to contain Chinese AI development

Additional news here includes:

- A US judge in early August ruled that Google maintained an illegal monopoly in search, a decision likely to have a significant impact across Big Tech, as investigations and suits are already underway against Apple, Amazon, Meta, and Nvidia.

- California’s SB-1047 (“Safe and Secure Innovation for Frontier Artificial Intelligence Models Act”) passed the state senate in May, triggering serious concern from the industry centered there. In August, the bill saw significant amendments lessening its impact, in part with input from Anthropic. The state can still sue after a catastrophic event but, beforehand, can only request that dangerous operations cease. Companies no longer need to submit certifications of safety and must instead outline their safety practices. Like many European regulations, the bill would now also exclude smaller, open-source model users (those spending less than $10 million tuning a covered model). A final vote is expected shortly.

Coming Soon

Search is rapidly becoming AI’s domain, with OpenAI entering the game to challenge Google. One issue here: according to a Goldman Sachs report, a ChatGPT search uses on average 10 times the power of a conventional web search. The same report expects AI to drive a 160% increase in data center power needs.
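To put those two figures in perspective, here is a rough back-of-the-envelope sketch in Python. It shows that a 160% increase means 2.6 times the baseline, and how a 10x per-query cost scales total search energy as more queries move to AI. The function names and the 25% share used in the example are hypothetical, chosen only for illustration; they do not come from the Goldman Sachs report.

```python
def growth_multiplier(percent_increase: float) -> float:
    """Convert a percent increase into a multiplier on the baseline."""
    return 1 + percent_increase / 100


def relative_search_energy(ai_share: float, ai_cost_ratio: float = 10.0) -> float:
    """Total search energy relative to an all-conventional baseline of 1.0.

    ai_share is the fraction of queries answered by AI (an assumed input);
    ai_cost_ratio is how many times more power an AI query uses.
    """
    if not 0.0 <= ai_share <= 1.0:
        raise ValueError("ai_share must be between 0 and 1")
    return (1 - ai_share) + ai_share * ai_cost_ratio


# A 160% increase is 2.6 times the starting level, not 1.6 times.
print(f"{growth_multiplier(160):.1f}x baseline data center power")

# Hypothetical scenario: a quarter of all searches shift to AI.
print(f"{relative_search_energy(0.25):.2f}x baseline search energy")
```

Even a modest shift toward AI search multiplies the total, because each AI query carries ten times the weight of a conventional one.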

Still, the shift to AI search is part of a broader move to AI agents, an early AI prediction of systems that go beyond chatbots and actually make decisions to attain a user’s goal.

Former Google CEO Eric Schmidt, speaking to students at Stanford in a widely shared YouTube video and in an interview with Noema, includes this in his own AI insights, naming three things he believes will combine to radically change our world in the near term:

- Very long context windows: New techniques allow going from a million words to nearly infinite context windows, which can enable systems to loop back into themselves or other systems.

- Agents that can actually learn things: Ask your agent to learn a skill, for example, and it will soon be able to consume volumes of necessary textbooks and retain this knowledge. Combined with the above, you have radically improved chain-of-thought reasoning (à la recipes or procedures with thousands or more steps) to achieve what you need, helping an AI take two and three steps on its own.

- Text-to-action: Ask the AI to do something, such as write a program in a certain language that accomplishes a set of requirements, and it will be able to write it, implement it, and test the results.

This combination, Schmidt asserts, is not far off (“the next few years,” closer to 5 than 10) and will transform AI systems, giving them exponentially more power and use.
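The text-to-action idea of writing, implementing, and testing a program can be pictured as a simple generate-and-test loop. The Python sketch below is a toy illustration under stated assumptions, not how any real agent works: the `attempts` list is a hypothetical stand-in for a model’s successive tries, and `text_to_action` and `meets_requirements` are invented names.

```python
from typing import Callable, Iterable, Optional


def text_to_action(
    candidates: Iterable[str],
    passes_requirements: Callable[[dict], bool],
) -> Optional[str]:
    """Return the first candidate program that loads and meets the requirements."""
    for source in candidates:
        namespace: dict = {}
        try:
            # "Implement": load the candidate program.
            # Never exec untrusted code outside a sandbox.
            exec(source, namespace)
            # "Test": check the result against the stated requirements.
            if passes_requirements(namespace):
                return source
        except Exception:
            continue  # a broken attempt simply triggers the next try
    return None


# Hypothetical stand-in for a model's successive attempts at "write add(a, b)".
attempts = [
    "def add(a, b): return a - b",  # buggy first try
    "def add(a, b): return a + b",  # corrected second try
]


def meets_requirements(ns: dict) -> bool:
    """The requirements, expressed as executable checks."""
    return ns["add"](2, 3) == 5 and ns["add"](-1, 1) == 0


accepted = text_to_action(attempts, meets_requirements)
print(accepted)
```

The point of the sketch is the loop itself: the requirements act as executable checks, and the system keeps trying until a candidate passes or the attempts run out.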

OpenAI’s secretive “Strawberry” project, reported on by Reuters in mid-July, is well into development and goes beyond answering user queries, instead performing what OpenAI calls “deep research” by using the internet autonomously. It likely represents some progress on the steps above.

For professionals, a field called LLMOps continues to emerge, focused on the specialized needs of AI models. This can include ensuring optimization, reliability, safety, privacy, and compliance, handling necessary AI testing, and in the short term (for as long as it lasts) prompt writing.

While the field isn’t new, it continues to shift and evolve, entailing different tasks at different companies.

Conclusion

Back on the subject of open-source: Illia Polosukhin’s NEAR promises the arrival of user-owned AI, fusing blockchain and AI technological innovations together. The goal is to help solve what he sees as one of the critical issues of the AI-at-scale debate, access to quality data, by enabling collaboration and sharing among developers and users. This means that data use can be made visible, with microtransactions that can potentially allow content creators to be compensated as their work is used.

Like many smaller players, NEAR currently makes use of open-source models, which took a massive step forward in this period. Of course, progress is surging forward elsewhere, too, meaning the gap between the open and closed models will not remain this close for long.

We hope you’ve enjoyed our coverage of the latest breakthroughs in AI technologies for July and August 2024—expect our next edition around Halloween, hopefully devoid of AI-related horror stories.

References

Meta releases the biggest and best open-source AI model yet, The Verge

We finally have a definition for open-source AI, MIT Technology Review

The Blurred Reality of AI’s ‘Human-Washing’, Wired

Forbes presents publisher worries about AI, Editor & Publisher

‘Google Is a Monopolist,’ Judge Rules in Landmark Antitrust Case, The New York Times

Inside the fight over California’s new AI bill, Vox

AI is poised to drive 160% increase in data center power demand, Goldman Sachs

Mapping AI’s Rapid Advance, Noema Magazine

Exclusive: OpenAI working on new reasoning technology under code name ‘Strawberry’, Reuters