Anyone who reviews resumes, whether a recruiter or a hiring manager, has either already come across a fake candidate or someday will. This may be someone who lied on their resume or even went as far as to use a completely fraudulent identity. Fake candidates have only become more common since the pandemic-driven rise of remote work, and the arrival of AI now adds even more uncertainty.

Now, why does this happen? The answer is twofold: fake candidates may just want to get hired, or they may want to cause harm to the company. Most of the time, though, the goal is simply to get hired at all costs.

Recruiters see fake candidates all the time. According to a survey of recruiters and hiring managers by Checkster, 77.6% of respondents encountered candidates who were misrepresenting themselves to a moderate or greater level throughout the hiring process. Another study found that 64.2% of Americans have lied about their personal details, skills, experience, or references on their resumes at least once. This can become worrisome for recruiters for obvious reasons. Even the FBI has gotten involved due to an increase in candidates using deepfake technology, and, with advancements in AI making it increasingly difficult to detect fake candidates, there is reason to believe this trend will only grow.

Where AI Comes into Play

AI has provided many benefits for HR professionals, especially around the hiring process, but it can also cause problems that only further complicate things.

Consider this situation: You’re a recruiter assessing seven job candidates, but six of them have very similar resumes. Coincidence? With GenAI becoming practically synonymous with resumes, it isn’t far-fetched to think that many candidates are simply pasting job descriptions into ChatGPT and asking it to create the ideal candidate’s resume for the role. That risks introducing inaccurate information, bogus statistics, and even details from the resume of another person who also used AI. And if job seekers are applying to jobs in high volumes, they likely aren’t taking the time to tweak and personalize the output.
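As a rough illustration of how a batch of suspiciously similar resumes might be flagged, here is a minimal Python sketch that compares resumes by the three-word phrases they share (Jaccard similarity). The function names, the three-word phrase size, and the 0.5 threshold are all assumptions made for this example; it is not how any particular screening product works.

```python
from itertools import combinations


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word n-grams (shingles) in the text."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_pairs(resumes: dict, threshold: float = 0.5):
    """Yield (name_a, name_b, score) for resume pairs at or above the threshold.

    The 0.5 threshold is an arbitrary assumption; a real team would tune it.
    """
    sets = {name: shingles(text) for name, text in resumes.items()}
    for (name_a, set_a), (name_b, set_b) in combinations(sets.items(), 2):
        score = jaccard(set_a, set_b)
        if score >= threshold:
            yield name_a, name_b, score
```

A high score does not prove fraud, since candidates may share a template or a job title. It only tells the recruiter which pairs deserve a closer human look.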

The Deepfake Problem

Deepfake technology is another scary contributor to this problem. It has come into play since the rise of virtual interviews, especially in the IT industry, where remote jobs are common. The result: convincing synthetic media used by fraudulent candidates to hide their identities during video interviews.

The FBI has even issued warnings about a scheme in which fraudulent candidates apply for remote-work positions and use deepfake technology to hide their identity during the video interview process. In fact, 35% of US businesses have experienced a deepfake security incident in the last 12 months, making it the second most common cybersecurity incident in the country.

A similar incident involved a North Korean hacker who altered his photo to look more American so he could get hired by a U.S. security vendor. In this instance, the hacker enhanced his photo with AI and, once hired, immediately proceeded to load malware.

[Make sure to check out The PTP Report cybersecurity coverage to learn about how companies can protect themselves.]

How AI Can Also Help Detect Fake Candidates

Global market research company Forrester Research predicts that at least one high-profile company will hire a nonexistent job candidate next year. Predictions like this are pushing top companies to act. For example, recruiters are being forced to employ protective AI of their own in response to candidates’ use of bots and generative AI.

The good news is that there are ways in which AI can help prevent these fake candidates before they’re hired. According to a late-2023 IBM survey of more than 8,500 global IT professionals, 42% of companies were already using AI screening to improve recruiting and human resources. AI recruiting tech could greatly help recruiters, especially by limiting the number of fake candidates that make it through.

In addition, AI can help detect which resumes were written by AI. Spotting things like typos, keyword stuffing, and formatting errors is a good first step in identifying a fake candidate. More advanced clues include inconsistencies or unusual job transitions. AI can even scan the candidate’s LinkedIn profile to check that it is legitimate.
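To make one of these first-step checks concrete, here is a small Python sketch of a keyword-stuffing heuristic: it measures what share of a resume’s words are lifted straight from the job description. Everything in it is an assumption made for illustration, including the stop-word list, the function names, and the 0.6 cutoff. Commercial screening tools are likely far more sophisticated.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list for this example.
STOP_WORDS = {"a", "an", "and", "the", "of", "in", "to", "with", "for", "on", "at", "or"}


def tokens(text: str) -> list:
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def keyword_density(resume: str, job_description: str) -> float:
    """Share of resume words (ignoring stop words) that appear in the job description."""
    job_terms = set(tokens(job_description)) - STOP_WORDS
    resume_words = [w for w in tokens(resume) if w not in STOP_WORDS]
    if not resume_words:
        return 0.0
    hits = sum(1 for w in resume_words if w in job_terms)
    return hits / len(resume_words)


def most_repeated(resume: str, top: int = 3) -> list:
    """Return the most frequent non-stop-words in the resume with their counts."""
    counts = Counter(w for w in tokens(resume) if w not in STOP_WORDS)
    return counts.most_common(top)


def looks_stuffed(resume: str, job_description: str, max_density: float = 0.6) -> bool:
    """Flag a resume whose overlap with the job description exceeds the cutoff.

    The 0.6 cutoff is an assumed value, not an industry standard.
    """
    return keyword_density(resume, job_description) > max_density
```

A flag from a check like this is only a prompt for human review. A strong, honest candidate will naturally echo some of the job description’s language.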

AI-powered interview tools use voice and facial expression analysis to determine a candidate’s tone, demeanor, and emotional state. These tools, such as emotion AI, can even monitor the candidate’s eye contact to determine if they are reading from something during the online interview process. AI is beneficial, but hiring should still balance human and AI involvement. For instance, AI can handle initial screening and data analysis, while humans can take charge of interactions and judgment calls that require emotional intelligence and intuition.

Glider AI is another tool that can identify candidates engaged in fraudulent activity and prevent them from progressing to the interview stage.

To counter deepfakes in interview calls, employers could also deploy AI-powered deepfake detection technology to help identify and flag potential threats.

Conclusion

It is imperative that companies be aware of how fake candidates operate. As AI gets more advanced, fake candidates will find new ways to try to get hired. AI already makes resume screening more efficient (a recruiter, on average, spends 23 hours screening resumes for a single hire), but companies must also be aware of deepfake technology.

The good news is that AI can also help companies fight against fake candidates. Using AI, they can detect if a resume was written by AI. AI-powered interview tools also help fight against candidates who use deepfake technology or read from a script.

But it is still important for companies to find a balance between human and AI involvement in the recruiting process. Interviews that take place in person will always be superior because there is an opportunity to connect with a candidate in a more meaningful way.

Fake candidates can be a real nuisance and a possible threat, so companies should take proper precautions when hiring to make sure the candidate is real. Preventive measures such as improved checking of candidate identification and information, technical assignments and detailed questions, and visual screening methods could greatly reduce the number of fake candidates companies encounter.

References

AI hiring tools may be filtering out the best job applicants, BBC

Beware of fake job candidates, LeadDev

Deepfakes Are Being Used to Fake Job Interviews, AI, Data and Analysis Network

Deepfake Scams Expose Employers to Big Risks, SHRM

Fake Candidates, hallucinated jobs: How AI could poison online hiring, Fast Company

Fake Candidates: 8 Red Flags Recruiters Should Look Out For, NPA Worldwide

How Job Candidates Are Using AI to Create Fake Resumes, Core Matters

How we used Glider AI to combat candidate fraud for a financial services client, Pontoon Solutions

North Korean hacker got hired by US security vendor, immediately loaded malware, Ars Technica

The Growing Problem of Fake Job Candidates, The Ash Group Newsletter

The Peril of Fake Job Candidates in the Technology and IT Industry, Macrosoft Inc

Will Employers Be Duped by Job Applicants Using AI?, Eanet, PC

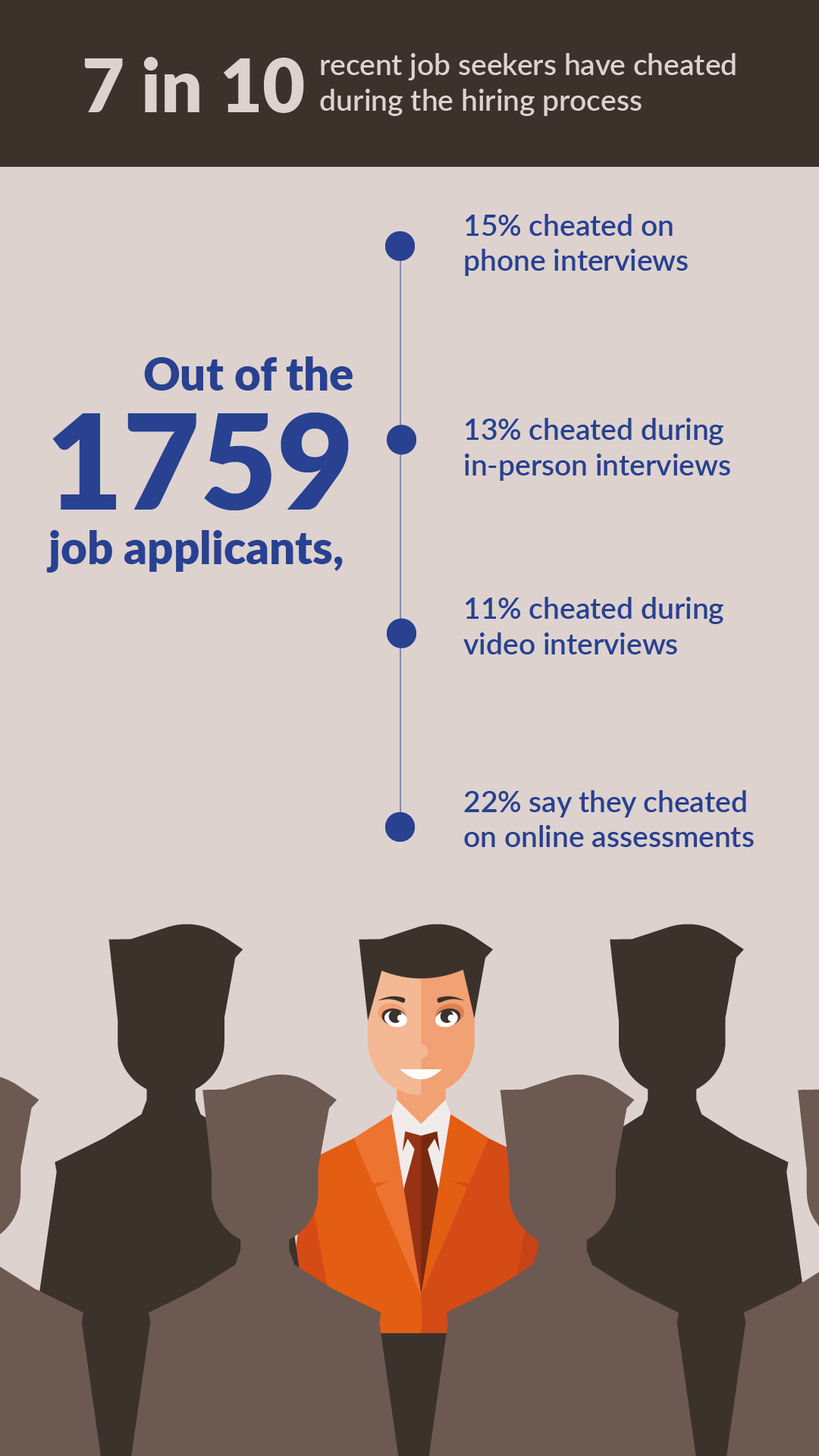

7 in 10 Recent Job Seekers Cheated During the Hiring Process, ResumeTemplates