It’s after Halloween and time for our bi-monthly roundup of the latest AI news! If you gorged on candy or filled your lawn with gigantic skeletons, you might be feeling a letdown, but no such letdown’s happening for the AI giants as we near the close of 2024.

With many of the Q3 earnings just in, the monstrous expenses (big even by the standards of normal capital expenditures in tech) continue apace.

Last time out, we hit on the arrival of the big open-source players, but this time, it’s back to the closed systems, the hyperscalers and big tech innovators, who’ve been investing in build-out, rolling out new systems, and readying AI agents to erupt on the scene. We check in on the constantly shifting world of AI regulations and look at some misfires during the period.

And if you need to catch up on our prior roundups of AI advancements in 2024 to date, you can do so below:

Eye on the Giants

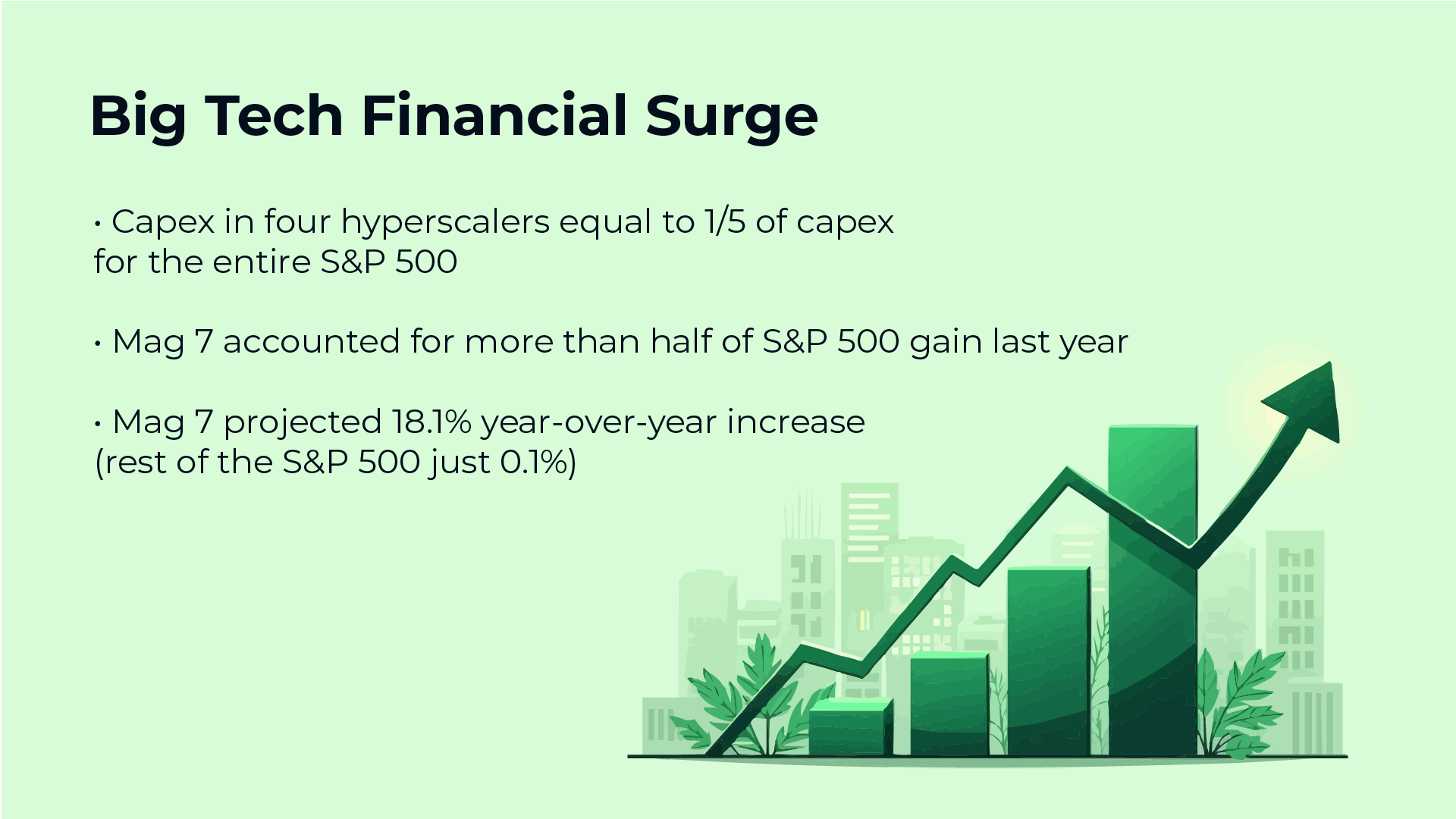

With the AI infrastructure buildout continuing to surge, let’s open with some numbers:

The biggest tech players, especially the hyperscalers—Google, Microsoft, and Amazon—are leading in investments, with combined spending that outpaces many top U.S. companies.

As earnings came in at the end of October, each of the big players exceeded performance expectations and continued its buildout with no sign of slowing down.

However, markets have cooled somewhat, at least for now, possibly due to concerns that AI development costs could cut into short-term profits, as seen with Microsoft and Meta.

And speaking of buildout, one potential bottleneck to data center scaling is energy (as we’ve covered previously), which has the major players moving aggressively after nuclear power for AI to keep their scaling operations on track.

Microsoft signed a deal to get power from Three Mile Island, site of the worst nuclear accident in US history. The undamaged parts of the plant will be reopened under a new name, the Crane Clean Energy Center. Amazon has made a similar deal with Talen Energy.

In mid-October, Google joined the fray with Kairos Power, planning on nuclear power from seven smaller, modular reactors currently in development. Amazon did likewise with X-Energy.

These modular reactors aren’t expected to be online until 2030 but are geared to be faster to get on their feet and cheaper to operate.

This push comes as Microsoft and Google report rising emissions that put their net-zero goals further out of reach, with AI as the main culprit.

And while the efficiency of AI systems is expected to improve as they develop, usage is also scaling up. With one ChatGPT query estimated to use as much energy as a lightbulb does in 20 minutes, it’s easy to see why the International Energy Agency expects data centers worldwide to consume as much electricity as the entire nation of Japan by 2026.

New Products

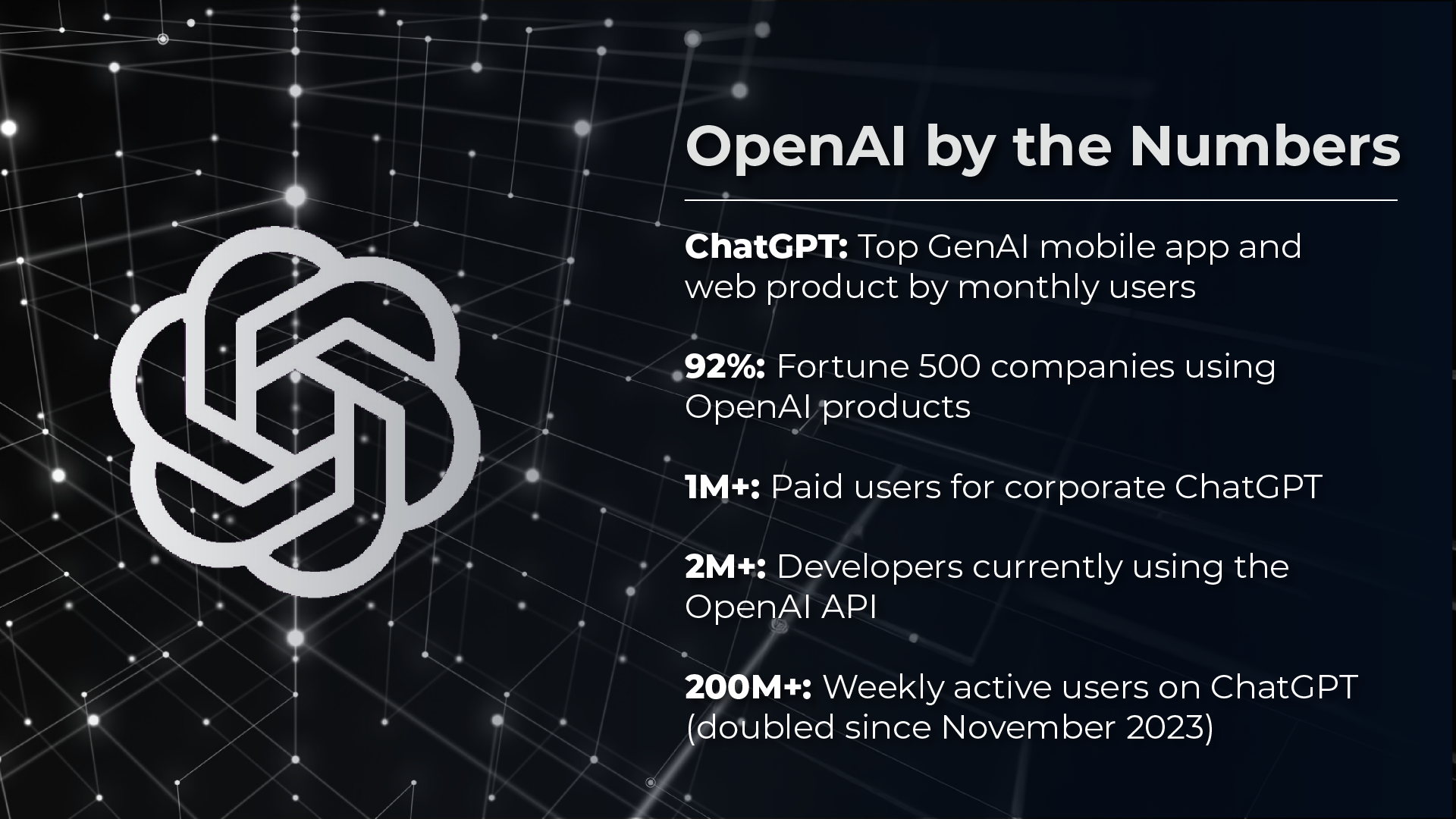

Speaking of usage, OpenAI—now valued above $100 billion after landing the largest venture capital deal in history in October ($6.6B)—remains the king.

OpenAI was in the news for launching its highly secretive Strawberry/Q* project in September, an update in so-called AI reasoning that represents a significant step forward in math and software coding. Released as o1 (currently available as o1-preview and o1-mini), it follows on similar innovations made at Google.

[Read our discussion of Google’s innovations in this technology here. Our CEO has also written on analyses of o1’s functionality on his Substack.]

The Verge broke news on the Orion AI release, the first new model from OpenAI since Strawberry. Reportedly due out before the end of 2024 (with Microsoft hosting it on Azure first), it may be offered to companies first for developing their own products. Despite rumors of Orion being far more powerful than GPT-4 and wholly separate from the o1 reasoning model, OpenAI itself has denied the reports (much as it did with Strawberry/Q*).

AI agents, or AI systems built for specific purposes that can access live data and accomplish tasks with some autonomy, are being heralded as the next big step.

Salesforce has gone all in on agents, which it calls “the third wave” of AI. They’ve rolled out Agentforce, going above and beyond chatbots to get tasks done autonomously. Their agents have five distinct attributes (role, data, actions, guardrails, channels) and work in the following areas:

- Service Agent for customer service

- Campaign Optimizer for full campaign management

- Personal Shopper for products and product queries

- Buyer for B2B customers, with order tracking and more

- Merchant for site-related tasks

- Sales Development Representative (SDR) to reach out to leads

- Sales Coach for sales team training
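For readers who think in code, the five attributes above can be pictured as fields on a simple record. The Python sketch below is purely illustrative: the `AgentDefinition` class, its field types, the `can_perform` helper, and all example values are hypothetical and do not reflect Salesforce’s actual Agentforce API or configuration format.

```python
from dataclasses import dataclass, field


@dataclass
class AgentDefinition:
    """Hypothetical record mirroring the five attributes named above."""

    role: str                                             # the job the agent does
    data: list[str] = field(default_factory=list)         # sources it may read
    actions: list[str] = field(default_factory=list)      # tasks it may perform
    guardrails: list[str] = field(default_factory=list)   # limits on its behavior
    channels: list[str] = field(default_factory=list)     # where users reach it

    def can_perform(self, action: str) -> bool:
        """Return True only if the action is explicitly listed."""
        return action in self.actions


# Example values are invented for illustration only.
service_agent = AgentDefinition(
    role="Service Agent for customer service",
    data=["order history", "help articles"],
    actions=["look up order", "open support case"],
    guardrails=["escalate to a human when unsure"],
    channels=["web chat", "email"],
)

print(service_agent.can_perform("look up order"))  # True
print(service_agent.can_perform("issue refund"))   # False
```

The point of the sketch is the shape, not the specifics: an agent is described by what it is for, what it can see, what it can do, what it must not do, and where it shows up.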

Microsoft is also rolling out agent technology across its Copilot products, with GitHub Copilot now capable of running multiple LLMs (offerings from Anthropic in addition to OpenAI).

As discussed in a prior post, early projects boast significant improvements for things like onboarding, drafting legal documentation, and writing code.

Apple’s AI implementations are geared toward being the safest and most private, with rollouts of “Apple Intelligence” happening now across its products. Meanwhile, another big player, IBM, entered the ring in force with its Granite 3.0 rollout.

This newest model targets enterprises with data-heavy use requiring extensive computational power, from healthcare to finance to supply chain management, performing well in measures of analytics and automation.

While our last edition marked the arrival of great advances in open-source AI, this time it’s the big players putting their money down.

AI Regulation Updates

In addition to investments and rollouts, some developments at the big tech companies are now being classed as national treasures by the latest US government AI regulations. The Department of Commerce’s National Institute of Standards and Technology (NIST) formalized a deal with OpenAI and Anthropic to collaborate on research, safety, and testing.

In the first US national security memorandum on AI (38 pages and an appendix were made public in late October), focuses include:

- Guardrails for use by the defense agencies, especially regarding decision-making, target selection, asylum, tracking by race/ethnicity, etc.

- Required annual reports to the President on the risk of AI aiding the development of chemical and biological weapons, as well as reports from the Energy Department on nuclear risks

- Treatment of closed-model private-sector systems as national assets, in terms of protection from other nations, including providing these companies updated assistance for protection against AI security risks

- Directing the AI Safety Institute (part of NIST) to inspect new AI tools to ensure they can’t aid terrorist organizations (as part of the NIST collaboration discussed above, which also gives the institute access to models before and after release)

- Describing efforts to bring the best AI minds to the US, akin to the development of atomic weapons during the Cold War

In California, 18 laws have been passed that regulate AI—including coverage of development transparency, risk assessment, healthcare, privacy, deepfakes, and AI literacy—though the most publicized and contentious of the bills, SB-1047, was vetoed by Governor Gavin Newsom in September.

In Europe, leadership changes in some of the EU’s top regulatory posts may also signal a change in approach, with the figure most associated with a bevy of big tech fines—Thierry Breton—among those stepping aside.

The slate of AI regulations remains in place, but this could reflect a change in how they’re enforced going forward.

[For more on Europe’s AI regulations, check out this edition of The PTP Report.]

Woes

No new technology sails through without hiccups, and AI has seen plenty, especially considering the scale and speed of its adoption.

Some stories from this period include:

- Perplexity, the AI search engine startup, came under fire from numerous media outlets both for copyright infringements—scraping and displaying content without permission or even proper attribution—and for hallucinations getting mixed in with real reporting.

- A pair of Harvard students modified Meta’s smart glasses to turn them into facial recognition scanners, capable of moving from person to person and bringing up names, addresses, phone numbers, and more. As reported by 404 Media, the duo has not released the code and created the project to raise awareness of serious AI ethics concerns about how easily these tools can be modified for nefarious purposes.

- Stories continue to abound of students being falsely accused of cheating due to AI-checker failures (or misuse of the checkers). This includes the first known lawsuit of its kind, filed on behalf of a high school student in Massachusetts. While AI tools are widely used by students (the Digital Education Council estimates that 54% of higher ed students used AI weekly for schoolwork in 2024), telling AI writing apart from human writing remains tricky.

- And in a case that’s maybe about AI doing its job TOO well, The Washington Post reported a story in early October of AI transcription run amok: after a Zoom call with potential investors had ended, private chatter among the company’s staff was picked up and automatically emailed out in the meeting minutes. This discussion included internal failures and even manipulated numbers, and ultimately killed the deal.

Conclusion

This concludes our coverage of AI breakthroughs and developments from September and October 2024. We hope you’ve found it useful in keeping up to date with the frantic pace of change.

Expect the next roundup at year’s end, as we peer around the corner to what’s in store for AI in 2025!

[If you’re on the lookout for your own AI experts, or are one yourself, consider PTP.]

References

OpenAI says ChatGPT usage has doubled since last year, Axios

The Top 100 GenAI Consumer Apps, Andreessen Horowitz

Nuclear Power Is the New A.I. Trade. What Could Possibly Go Wrong?, The New York Times

A Lawsuit Against Perplexity Calls Out Fake News AI Hallucinations, Wired

AI brings soaring emissions for Google and Microsoft, a major contributor to climate change, NPR

The Crunchbase Megadeals Board, Crunchbase News

OpenAI plans to release its next big AI model by December, The Verge

Meet Agentforce, Salesforce’s autonomous AI answer to employee burnout, ZDNet

Biden Administration Outlines Government ‘Guardrails’ for A.I. Tools, The New York Times

U.S. AI Safety Institute Signs Agreements Regarding AI Safety Research, Testing and Evaluation With Anthropic and OpenAI, NIST

Big Tech’s New Adversaries in Europe, Wired

AI assistants are blabbing our embarrassing work secrets, The Washington Post