When news broke in October that two Harvard students had created a pair of real-time facial recognition glasses, the most surprising thing was how easy it was.

While they withheld their code, they did share videos of the glasses in action. Like something out of James Bond or Mission: Impossible, the glasses allowed the wearer to pull names, contact information, and other personal details about people, even on mass transit, simply by looking at them.

The students adapted off-the-shelf Ray-Ban Meta smart glasses to livestream video to Instagram, where their program used AI to match faces. From there, it was as simple as searching publicly available databases and sending the results back via a phone app.

The alarming ease of this conversion points to both the incredible capabilities and the grave data privacy risks of this sudden explosion in AI technology.

For businesses, the power of automation is already impressive and improving at a startling rate. (In case you missed it, Yann LeCun, a longtime AGI skeptic, is the latest to suggest we could potentially have AGI within 5–10 years, speaking in a recent interview with Nikhil Kamath.)

At the same time, the speed of advancement, combined with the integration of numerous systems that carry black-box AI risks, raises the question: How do you harness this power without exposing user data to cybercrime, misuse, or regulatory scrutiny?

As we move into 2025, balancing AI adoption with data privacy regulations is proving increasingly challenging.

In this PTP Report, we look at AI integration and data access risks, considering potential strategies for staying ahead of the curve.

By building architecture around data privacy, ending the norm of unnecessary data collection, and embracing the union of AI and zero-trust architecture, organizations don’t have to choose between falling behind and accepting a future of great risk.

AI and Data: Black Boxes and Known Unknowns

One of the persistently costly aspects of AI is its reliance on data. AI needs good, clean data, which usually comes from publicly available, proprietary, or purchased sources. For predictive AI tailored to a specific use case, you need increasingly specific data for the domain in question.

With search, email, artwork, analytics, hiring, coding, and more becoming common uses, AI systems are hungry for ever more data across the spectrum. And sources are increasingly available, too: our many transactions and online actions, camera monitoring, and inputs from IoT devices and sensors spanning appliances, thermostats, fitness, healthcare, entertainment, and more.

[For a look at emerging cybersecurity risks in AI, such as prompt injection, check out this edition of our Cybersecurity Roundup.]

Former Harvard Business School professor Shoshana Zuboff coined the term “surveillance capitalism” to describe this process, in which value is extracted from our digital behavior through direct tracking or third-party systems. AI’s need for data, and its capacity to generate automation and insights from it, only intensifies the struggle.

The temptation to harness AI across these sources is enormous, and so are the risks. From tightening limits on how personal data is collected and maintained (Italy temporarily banned ChatGPT over such concerns) to the possibility of systems regurgitating personally identifiable information (PII), the danger to businesses is not only potentially severe but also frustratingly enigmatic.

How can anyone be sure how the data they use is being processed? European regulators, for example, have issued individual GDPR fines exceeding a billion euros, and the reputational damage from such failures can cost businesses even more.

[For more on AI and GDPR compliance, check out this edition of The PTP Report.]

And the risks go beyond personal privacy, exposure, and direct damage to businesses. Because they rely on so much data, AI systems are also almost invariably biased: commonalities in available data sources reinforce and perpetuate existing stereotypes.

Data issues skew decision-making, leading to biases in everything from hiring to language capabilities to representation, and even in more trivial areas such as avatar accents.

Strategies for AI Data Privacy

Privacy engineering is emerging as a field of its own, and as organizations deploy more AI systems that operate at great scale and cost (often with less than ideal transparency and understanding), the need for this specialty only continues to grow.

Whether the goal is compliance with privacy policies, industry regulations, or the law, these practices can potentially save organizations billions of dollars by prioritizing data protection from the start.

Recommended privacy engineering and AI data protection techniques include:

- Anonymizing, minimizing, and encrypting data as much as possible

- Applying a zero-trust approach

- Good practice with audits, access control, and transparency

- Leveraging federated learning

- Designing systems for privacy from the ground up

Let’s look at these in a little more detail:

Data Anonymization

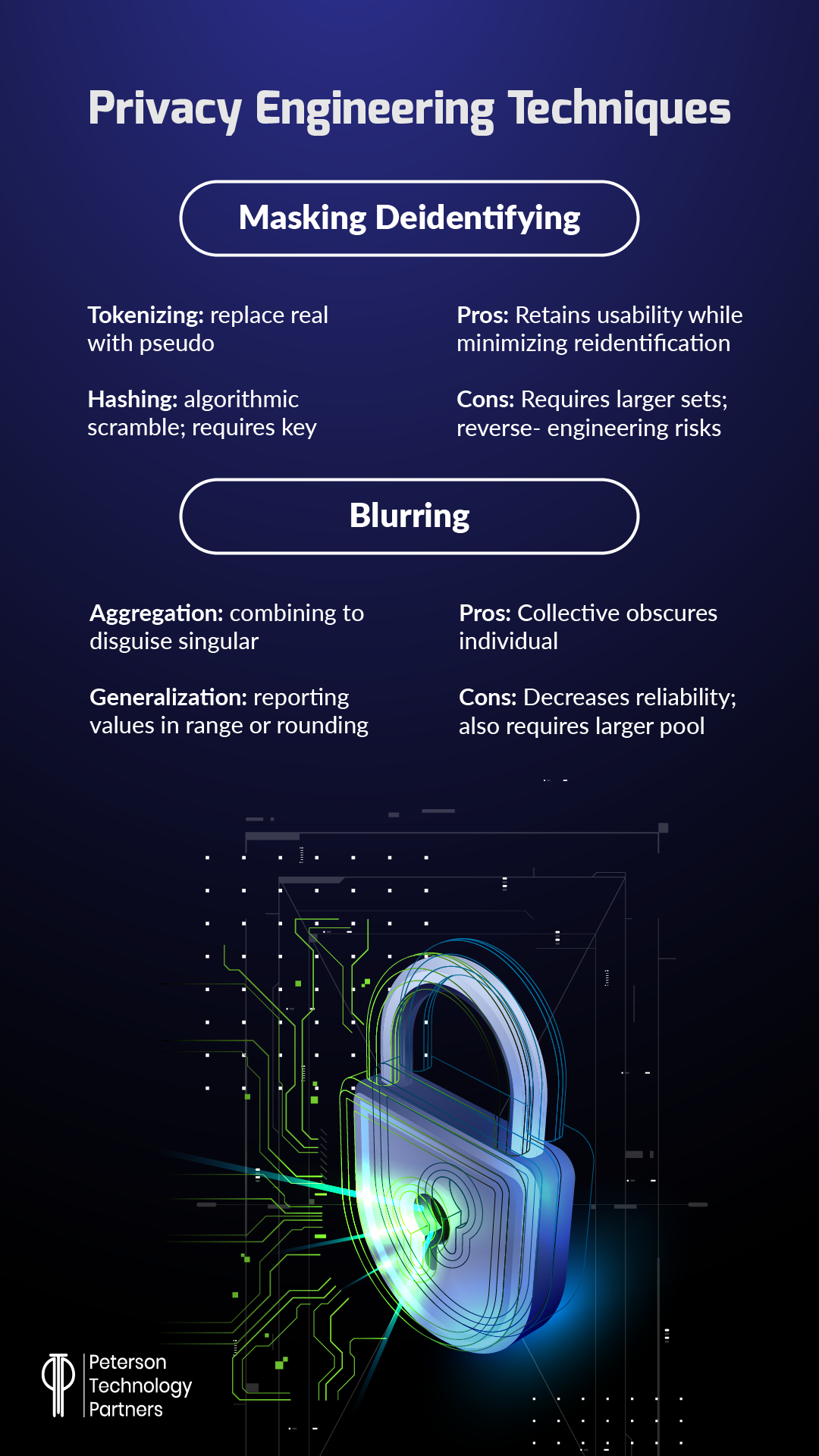

Where possible, this protection begins by removing key identifiers, and there are various ways to go about it practically, from masking to generalization:

Source: Privacy Engineering by Joseph Williams and Lisa Nee
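The masking and generalization techniques named above can be sketched in a few lines of Python. This is a minimal illustration, not Williams and Nee’s method: the `Record` fields, the 10-year age buckets, and the three-digit ZIP prefix are all hypothetical choices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    # Hypothetical customer record used only for illustration.
    name: str
    email: str
    age: int
    zip_code: str


def mask_email(email: str) -> str:
    """Masking: keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    if not local or not domain:
        return "***"
    return local[0] + "***@" + domain


def generalize_age(age: int, bucket: int = 10) -> str:
    """Generalization: replace an exact age with a range such as '30-39'."""
    low = (age // bucket) * bucket
    return f"{low}-{low + bucket - 1}"


def generalize_zip(zip_code: str, keep: int = 3) -> str:
    """Generalization: keep only the leading digits of a ZIP code."""
    return zip_code[:keep] + "*" * max(len(zip_code) - keep, 0)


def anonymize(record: Record) -> dict:
    # Suppression: the direct identifier (name) is dropped entirely.
    return {
        "email": mask_email(record.email),
        "age_range": generalize_age(record.age),
        "zip_prefix": generalize_zip(record.zip_code),
    }


print(anonymize(Record("Jane Doe", "jane.doe@example.com", 37, "02138")))
# {'email': 'j***@example.com', 'age_range': '30-39', 'zip_prefix': '021**'}
```

Each transformation reduces detail, but none guarantees anonymity on its own. A masked email still reveals its domain, and combinations of generalized fields can still single people out in small datasets, which is why these steps are usually combined with other protections.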

This can be strengthened by minimizing collection and storage wherever data isn’t needed, especially when working with AI and machine learning systems.
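One practical way to minimize what reaches an AI system is a per-purpose allowlist that strips every field a given use hasn’t been approved for. The sketch below assumes hypothetical purposes (`churn_model`, `support_chatbot`) and field names; it illustrates the idea rather than any specific product.

```python
# Hypothetical allowlist: each downstream use (e.g., an AI feature) is approved
# for a specific set of fields; everything else is dropped before it leaves.
ALLOWED_FIELDS: dict[str, frozenset[str]] = {
    "churn_model": frozenset({"plan", "tenure_months", "monthly_usage"}),
    "support_chatbot": frozenset({"plan", "open_tickets"}),
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields approved for this purpose; unknown purposes get nothing."""
    allowed = ALLOWED_FIELDS.get(purpose, frozenset())
    return {k: v for k, v in record.items() if k in allowed}


customer = {
    "name": "Jane Doe",
    "email": "jane@example.com",
    "plan": "pro",
    "tenure_months": 14,
    "monthly_usage": 312.5,
    "open_tickets": 2,
}
print(minimize(customer, "churn_model"))
# {'plan': 'pro', 'tenure_months': 14, 'monthly_usage': 312.5}
```

An allowlist is used rather than a blocklist so that new fields added to a record later are excluded by default instead of silently flowing to the model.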

An approach recommended by Stanford University’s February 2024 paper Rethinking Privacy in the AI Era is to change our long-standing practice of collecting by default.

Instead of opt-out, the authors recommend focusing on meaningful collection and consent through an opt-in approach, establishing a “privacy by default” basis.
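In code, “privacy by default” can be as simple as making “not granted” the starting state for every purpose, so nothing is collected until a user explicitly opts in. This is a hypothetical sketch; `ConsentRecord`, `collect`, and the purpose names are illustrative assumptions, not drawn from the Stanford paper.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Hypothetical per-user consent record: every purpose starts out NOT granted."""
    user_id: str
    granted: set[str] = field(default_factory=set)

    def opt_in(self, purpose: str) -> None:
        self.granted.add(purpose)

    def opt_out(self, purpose: str) -> None:
        self.granted.discard(purpose)

    def allows(self, purpose: str) -> bool:
        return purpose in self.granted


def collect(consent: ConsentRecord, purpose: str, value: object, store: list) -> bool:
    """Store a value only if the user has explicitly opted in to this purpose."""
    if not consent.allows(purpose):
        return False  # default: nothing is collected
    store.append((consent.user_id, purpose, value))
    return True
```

Consent is tracked per purpose, so agreeing to one use (say, support analytics) doesn’t quietly authorize another (say, model training).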

In addition to these approaches, introducing noise into datasets or query results, such as through differential privacy, can further limit re-identification risks.
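Differential privacy has a precise meaning. A mechanism is ε-differentially private if adding or removing any one person’s record changes the probability of any output by at most a factor of e^ε. One standard way to achieve this for a count is the Laplace mechanism, sketched below. The function name and parameters are illustrative, and naive floating-point noise like this is known to leak information in edge cases, so production systems should rely on a vetted DP library.

```python
import random


def dp_count(flags: list[bool], epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1 (adding or removing one person changes
    the true count by at most 1), so Laplace noise with scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    # The difference of two independent Exponential(rate = 1/scale) draws
    # is Laplace(0, scale) distributed.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return sum(flags) + noise


rng = random.Random(42)
flags = [True] * 40 + [False] * 60  # e.g., 40 of 100 users have some attribute
print(dp_count(flags, epsilon=0.5, rng=rng))  # noisy value centered on the true count of 40
```

Smaller ε means more noise and stronger privacy. Because each release spends some of the privacy budget, repeated queries on the same data need to be accounted for.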

Zero Trust Approaches

Zero-trust security is something we at PTP have written about, and worked on implementing, quite a bit. We won’t repeat too much here, aside from noting that its basis is to assume no entity (user, device, or service) has a right to access any part of a system at a given time until that access is verified.

This also holds for AI systems, which need to be rigorously checked using the same standards.
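Applied to AI, this means an AI agent’s requests get the same per-request checks as any user’s. The sketch below is a hypothetical, simplified policy check: the `Request` fields, the `POLICY` table, and principals like `analytics-bot` are assumptions. Real deployments delegate these checks to identity providers and policy engines.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Request:
    principal: str        # user, service, or AI agent making the call
    token_expiry: float   # Unix time at which the presented credential expires
    device_trusted: bool  # outcome of a (hypothetical) device-posture check
    resource: str
    action: str


# Hypothetical least-privilege policy: (principal, resource) -> permitted actions.
POLICY: dict[tuple[str, str], frozenset[str]] = {
    ("analytics-bot", "sales_aggregates"): frozenset({"read"}),
    ("alice", "customer_pii"): frozenset({"read"}),
}


def authorize(req: Request, now: Optional[float] = None) -> bool:
    """Evaluate each request independently; no caller is trusted because of where it sits."""
    now = time.time() if now is None else now
    if req.token_expiry <= now:
        return False  # expired credential: re-authenticate
    if not req.device_trusted:
        return False  # unverified device
    allowed = POLICY.get((req.principal, req.resource), frozenset())
    return req.action in allowed  # anything not explicitly granted is denied
```

Here the AI agent can read aggregated sales data but is denied customer PII, because no rule grants it. The denial doesn’t depend on anyone anticipating that particular request.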

[For help on setting up your own zero trust architecture, contact PTP for the best onsite or remote cybersecurity experts.]

On AI Model Security

Beyond thoroughly scrutinizing an AI provider’s data handling before adopting its services, organizations face a fast pace of change and ongoing uncertainty that call for regular, ongoing audits of these systems. These audits should cover a company’s own AI data security practices as well as its access controls and encryption.
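Audits are only as good as the records behind them. One common technique is a tamper-evident, hash-chained access log, where each entry commits to the one before it. This is a minimal, hypothetical sketch (`AuditLog`, `record`, `verify`); it is not a replacement for a hardened logging service.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class AuditLog:
    """Append-only log of data access; each entry stores the previous entry's hash."""
    entries: list[dict] = field(default_factory=list)

    def record(self, actor: str, resource: str, action: str, timestamp: float) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"actor": actor, "resource": resource, "action": action,
                "timestamp": timestamp, "prev_hash": prev_hash}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute the chain; editing or removing a non-final entry breaks it."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

Note that truncating the most recent entries isn’t detected unless the latest hash is also stored somewhere the log’s writer can’t modify.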

Transparency with AI can be a challenge, with developers like Anthropic and Google still working to understand in detail how their own neural networks work. But one area where transparency is achievable is in carefully tracking data and disclosing AI use. Companies can build trust by disclosing all AI use, a practice that is also increasingly central to emerging regulations.

Federated Learning

Also known as collaborative learning, this approach to machine learning decentralizes the data used for training. Models are trained locally on each device or server, and only model updates, not the raw data, are shared and combined. This means AI models can be trained without exchanging potentially sensitive data, which stays on local devices, limiting exposure.
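Federated averaging (FedAvg) is a well-known way to combine those local updates. The toy sketch below trains a one-variable linear model: each client runs gradient descent on its own data and returns only its weights, which a server averages, weighting each client by its dataset size. The model, learning rate, and round counts are illustrative assumptions. Real systems also add protections such as secure aggregation, because model updates themselves can leak information.

```python
from typing import Sequence

Weights = list[float]  # [w, b] for the model y ≈ w * x + b


def local_update(weights: Weights, data: Sequence[tuple[float, float]],
                 lr: float = 0.1, epochs: int = 20) -> Weights:
    """Gradient descent on one client's data; the raw (x, y) pairs never leave here."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(client_weights: list[Weights], client_sizes: list[int]) -> Weights:
    """Server step: average client models, weighted by local dataset size."""
    total = sum(client_sizes)
    return [sum(cw[i] * size for cw, size in zip(client_weights, client_sizes)) / total
            for i in range(len(client_weights[0]))]


def train_federated(clients: list[list[tuple[float, float]]], rounds: int = 30) -> Weights:
    global_weights: Weights = [0.0, 0.0]
    for _ in range(rounds):
        # Each client trains locally from the current global model...
        updates = [local_update(global_weights, data) for data in clients]
        # ...and only the resulting weights are sent back and aggregated.
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights
```

For example, two clients holding different points from the line y = 2x + 1 jointly recover a model close to w = 2, b = 1, even though neither ever sees the other’s data.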

[Take a look at Pete & Gabi to learn more about how PTP protects client data with AI systems.]

Designing for Privacy

Aside from a broader zero-trust approach and anonymization, “privacy vaults” (such as those used by Google, Netflix, Apple, and Microsoft) are a form of data segregation that focuses on the highest-value, greatest-risk data.

This approach isolates PII from less sensitive data, aiming to maintain usability while maximizing protection.

This means any access to PII has to go through the vault, and an attacker who breaches other components cannot move laterally to reach the higher-risk data.

By separating the data the AI is allowed to use from the data held in privacy vaults, organizations maintain greater control over the elements that could potentially re-identify datasets.
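A common way to implement this separation is tokenization. PII is swapped for random tokens before a record reaches an AI pipeline, and only authorized services can exchange a token back for the real value. The toy, in-memory sketch below illustrates the pattern. `PrivacyVault`, `prepare_for_ai`, and the principal names are hypothetical, and a production vault would add encryption at rest, durable storage, and fine-grained policies.

```python
import secrets


class PrivacyVault:
    """Toy in-memory vault: PII is stored here, and other systems see only opaque tokens."""

    def __init__(self, authorized_readers: set[str]) -> None:
        self._store: dict[str, str] = {}
        self._authorized = set(authorized_readers)

    def tokenize(self, value: str) -> str:
        # Random tokens carry no information about the value they replace.
        token = "tok_" + secrets.token_hex(8)
        self._store[token] = value
        return token

    def detokenize(self, token: str, principal: str) -> str:
        # The single, policy-checked path back to raw PII.
        if principal not in self._authorized:
            raise PermissionError(f"{principal} may not read PII")
        return self._store[token]


def prepare_for_ai(record: dict, pii_fields: set[str], vault: PrivacyVault) -> dict:
    """Replace PII fields with vault tokens before a record reaches an AI pipeline."""
    return {k: vault.tokenize(v) if k in pii_fields else v for k, v in record.items()}


vault = PrivacyVault(authorized_readers={"billing-service"})
safe = prepare_for_ai({"name": "Jane Doe", "email": "jane@example.com", "plan": "pro"},
                      {"name", "email"}, vault)
print(safe["plan"])  # pro (non-PII passes through unchanged)
```

Random tokens mean the same value gets a different token each time. If records must be joined on a PII field, deterministic tokenization is an alternative, with the trade-off that equal values become linkable.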

Conclusion

Today, collecting data may be the easiest part of the data lifecycle for many businesses.

With added regulation, rising cybercrime, and the opacity of data-hungry technologies like AI, it’s increasingly essential that organizations gain and maintain control of exactly what’s being collected, stored, and shared, including with internal systems and third-party providers.

It’s about more than just responsible and ethical business practices—it’s also a financial and reputational necessity.

References

Someone Put Facial Recognition Tech onto Meta’s Smart Glasses to Instantly Dox Strangers, 404 Media

Privacy Engineering, Computer, vol. 55, no. 10

Rethinking Privacy in the AI Era, Stanford University

User Privacy Harms and Risks in Conversational AI: A Proposed Framework, arXiv:2402.09716 (February 2024)

ChatGPT banned in Italy over privacy concerns, BBC

AI and data privacy: Strategies for securing data privacy in AI models, InData Labs