In many ways, the digital world is already more important than the physical one. We use it to engage with coworkers, get work done, get paid, pay bills, socialize, and consume media.

But one area where it is unquestionably not more important is healthcare. We’re still flesh and blood, and without some consciousness upload (or maybe a Neuralink backup) we will continue to be. We get sick and injured, and we need real-world treatment.

As an industry, healthcare is also heavily regulated, overloaded by need and often under-supported by available workers and finances, making it an area desperate to seize on the improved efficiencies promised by digital transformation.

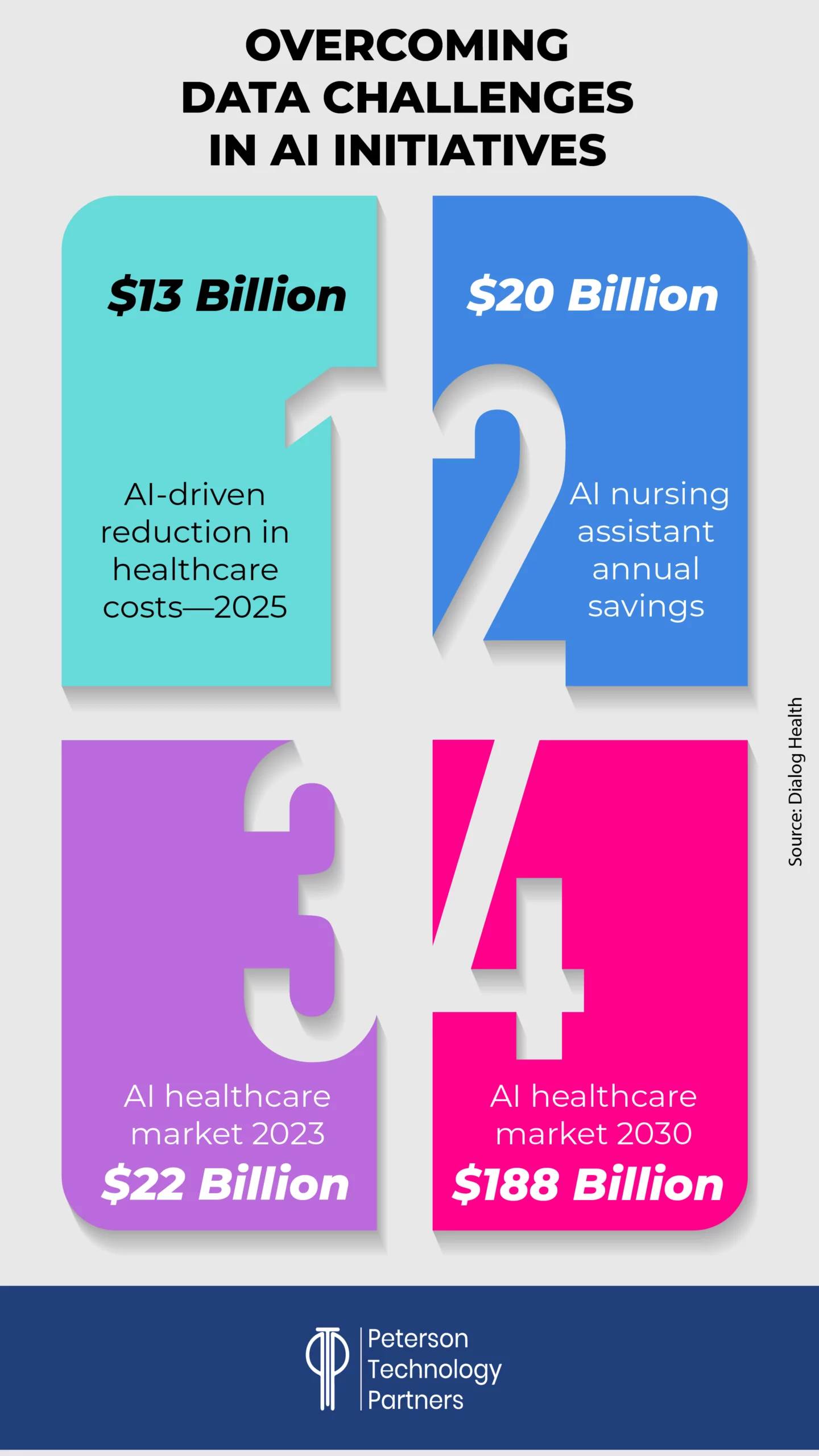

AI solutions are reportedly able to rule out heart attacks twice as fast as humans alone, with 99.6% accuracy. They are already assisting in surgeries to an extent that is expected to save some $40 billion annually, and they are providing some $20 billion in annual savings through nursing assistance alone. So it’s no surprise that 90% of hospitals are expected to adopt AI technology in 2025 for early diagnosis and remote patient monitoring alone.

In the first installment of our AI Works series, which will focus on AI adoption within specific industries, this week’s PTP Report dives into AI-driven healthcare innovation. We look at how AI is being used for listening, transcribing, analyzing, suggesting possible diagnoses, scheduling, securing, and making documentation accessible, reshaping everything from surgery to diagnostics to administrative work.

We’ll take a look at where it’s excelling, where it’s failing, and what all this may mean for the future of medicine.

Who’s Better: Human or AI for Medical Diagnosis?

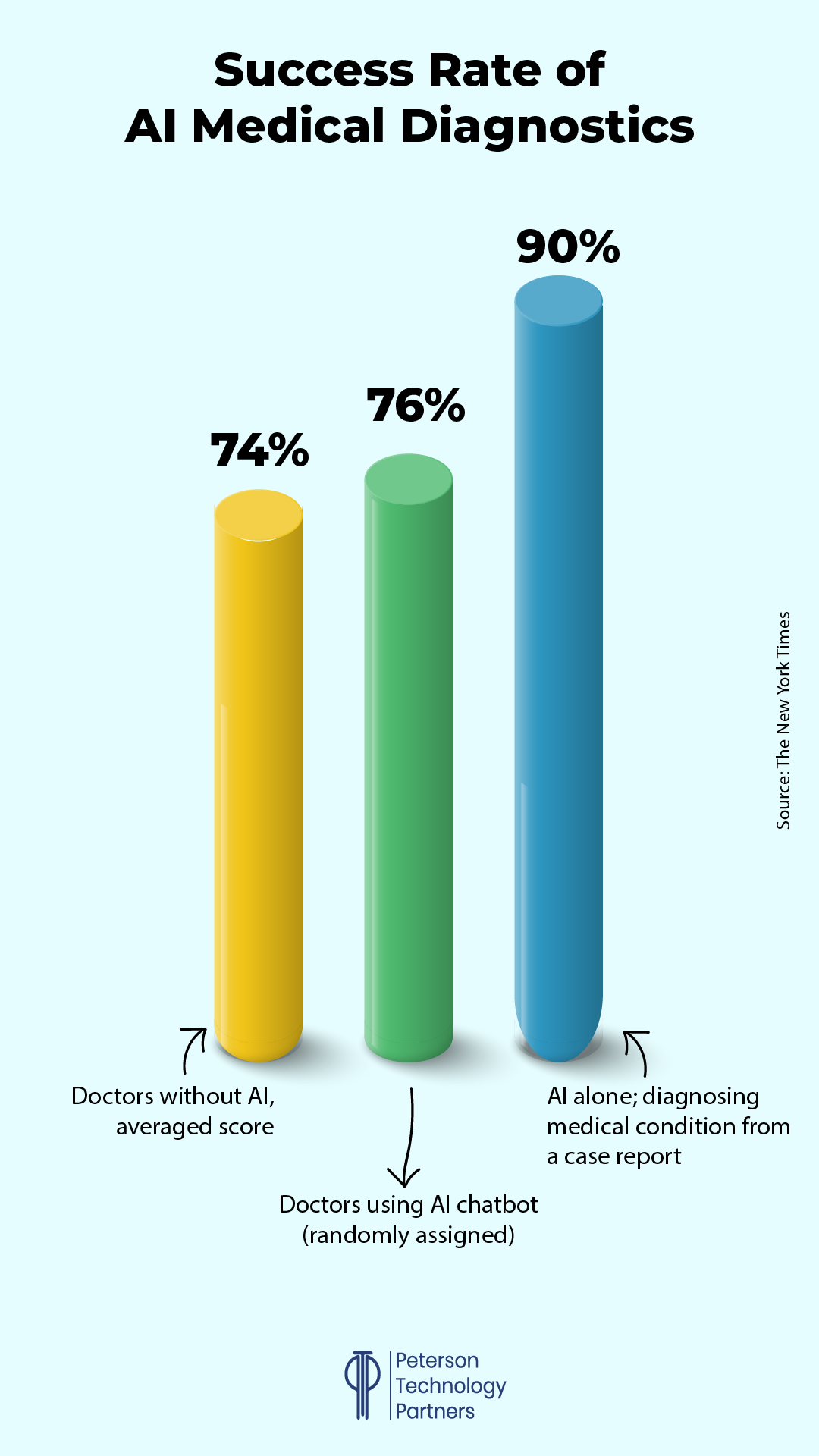

A study published in late October 2024 in the journal JAMA Network Open (and reported on in The New York Times) worked with 50 doctors, residents and attending physicians at various American hospital systems, to analyze the impact of AI on diagnosing illnesses.

And its findings made news because they defied expectations.

Some randomly assigned doctors were given ChatGPT-4 to aid in diagnosis, and the expectation was that AI assistance would clearly help them diagnose issues.

But surprisingly, the doctors with ChatGPT did only slightly better than those without (76% to 74% success rate).

Even more striking: ChatGPT working alone from case reports far outperformed both, with a 90% success rate.

How is it possible that medical professionals working with the diagnosis tools did worse than the tools alone?

Rather than the AI thriving as co-intelligence that extended the doctors’ abilities, researchers believe many of the doctors failed to take advantage of its insights, in part because they were fixated on their own knowledge and experience.

In other words, the people were overconfident.

A doctor’s specific mix of education, instinct, and insight is famously difficult to quantify, despite numerous attempts dating back nearly to the dawn of the computer. And yet LLM systems, with their ability to process extensive case studies, offer something new. They don’t understand anything in a human sense; instead, their ability to predict language, and by extension diagnoses, lets them draw on far larger datasets than was previously possible.

AI tools like ChatGPT cannot come close to replacing doctors themselves (even reasoning models don’t yet think, and they cannot understand the real world or the unique combination of factors involved in medicine). And yet studies like this are producing results that have stunned even the researchers behind them.

Beyond Diagnosis: Other AI-Powered Healthcare Solutions

For now, though, the primary benefits are coming less from diagnosis than from an array of other AI healthcare applications.

The most effective use cases lighten the burden of high patient volume, administration, and billing, and their financial promise is already impressive, even with the kinks of adoption still being worked out.

Let’s look at some specific examples of benefits we’re seeing put into practice through medical AI automation:

Medical Transcription AI

Nurses and doctors are often drowning in paperwork, spending some two hours on documentation for every hour of patient care. There’s a rush to address this, with AI-powered tools like Microsoft’s DAX Copilot transcribing doctor-patient conversations in real time. The goal is to free clinicians from this basic burden so they have more time for examination and direct engagement with patients.

Epic Systems, the largest provider of electronic health records in America, is piloting generative AI tools that, as reported by The Washington Post, already transcribe an estimated 2.35 million patient visits and draft some 175,000 communications per month.

The company has scores of other AI products in the pipeline, aimed at processing orders from visits and reviewing work from prior shifts.

Startups like Glass Health are also providing diagnostic and plan advice (like the study discussed above and the Blue Cross solution detailed below), as well as developing accessible healthcare advice portals for patient use.

AI in Healthcare Workflow

Hospitals are experimenting with AI solutions for things like fraud detection, access control, and improved workflow to reduce operational bottlenecks.

One common pain point being addressed is shift changes, where dropped information or miscommunication can cost lives.

HCA Healthcare, for example, uses an AI utility to support the 60,000 nursing handoffs that happen daily across its facilities. Designed in collaboration with Google Cloud, the system auto-generates reports to aid in these handoffs, and nearly 90% of participants rated the tool an improvement.

AI for Patient Care Improvement

Since COVID, more and more patients have been flooding medical service providers with direct communications on their health concerns, and this has worsened an already congested pipeline.

As in customer service across industries, generative AI solutions are being used to help meet this demand. Drawing on extensive datasets, language training, and advances in affective computing, they can handle non-critical communications in ways that are far more personalized, empathetic, and helpful than earlier automation. (See below for some concerns in this area.)

Precision Medicine and the Cutting Edge

Robots like the da Vinci Surgical System are being used to assist in surgeries, enhancing precision and minimizing recovery time. AI is also used to tailor treatment plans, helping ensure that patients receive the most effective medications and therapies for their specific genetic profiles.

Though it’s a subject vast enough for its own report, pharmaceutical discovery has been one of the earliest AI applications, and we’re seeing increasing research breakthroughs, from virtual cell modeling to disease prevention. AI has already contributed to Nobel Prize-winning accomplishments in these early days.

AI is being used to analyze patient data for insights and error reduction (DaVita), identify connections across research projects (BenchSci), and direct physicians to relevant test results far more quickly (Dasa).

AI in Healthcare Efficiency

But perhaps most impressive for the bottom line at this stage are the ways AI is supporting healthcare’s operational processes overall, from helping insurers process variable forms to auditing claims to improving access to documentation and communications.

AI-powered scheduling systems optimize appointment booking, minimize wait times, and can predict many no-shows in advance.
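To make the no-show idea concrete, here is a minimal sketch of how a scheduling system might score upcoming appointments and flag the riskiest ones for a reminder call or overbooking. It assumes a simple logistic model over three hypothetical features (prior no-shows, booking lead time, and whether the patient confirmed a reminder). The weights are invented for illustration, not taken from any real product; an actual system would learn them from a clinic’s own historical data.

```python
import math
from dataclasses import dataclass


@dataclass
class Appointment:
    patient_id: str
    prior_no_shows: int   # hypothetical feature: missed visits in the patient's history
    lead_time_days: int   # hypothetical feature: days between booking and the visit
    confirmed: bool       # hypothetical feature: patient replied to a reminder


# Illustrative weights only (assumption). A real system would fit these,
# e.g. with logistic regression on historical appointment outcomes.
WEIGHTS = {
    "bias": -2.0,
    "prior_no_shows": 0.8,
    "lead_time_days": 0.03,
    "confirmed": -1.5,
}


def no_show_probability(appt: Appointment) -> float:
    """Logistic score: the sigmoid of a weighted sum of the features."""
    z = (
        WEIGHTS["bias"]
        + WEIGHTS["prior_no_shows"] * appt.prior_no_shows
        + WEIGHTS["lead_time_days"] * appt.lead_time_days
        + WEIGHTS["confirmed"] * (1 if appt.confirmed else 0)
    )
    return 1.0 / (1.0 + math.exp(-z))


def flag_for_outreach(appts: list[Appointment], threshold: float = 0.3) -> list[str]:
    """Return IDs of patients whose predicted no-show risk meets the threshold,
    ordered from highest to lowest risk."""
    scored = [(no_show_probability(a), a.patient_id) for a in appts]
    return [pid for p, pid in sorted(scored, reverse=True) if p >= threshold]
```

With these made-up weights, a patient with three prior no-shows, a 30-day lead time, and no confirmation scores about 0.79, while a confirmed patient with a clean history booked three days out scores about 0.03. Flagged patients would then get human or automated follow-up, keeping staff in the loop rather than cancelling anything automatically.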

[Check out this article from PTP founder and CEO Nick Shah that details an AI automation solution to help insurers process claims.]

As profiled in the Harvard Business Review, Blue Cross Blue Shield of Michigan (BCBSM) has used AI to transform itself as regulatory and service changes have sharply increased complexity. With more than five million members and more than 37,000 providers, the need, and the stakes, were high.

Some of their most successful initiatives include:

BenefitsGPT: An internal AI tool of a kind we’re seeing across industries, it allows users to query unstructured data such as plan details, coverage, and exclusions, aggregated across departments. This gives benefit analysts fast access to information and enables much better customer service for millions of plan-holders. BCBSM has seen it reduce call times and expects it to shorten the time needed to configure its benefits systems overall.

ContractsGPT: BCBSM works with thousands of healthcare vendors and providers, each with its own unique contract, and this system allows suppliers to query these documents to find the information they need quickly (such as terms and pricing). It also helps identify overlaps across contracts and suggests improvements and updates.

SecureGPT: Built to address data privacy concerns, this system offers role-based access to LLMs to limit potential queries, keeps employee exchanges with the AI on topic, and prevents the sharing of unethical outputs.

It logs all interactions to aid with audits and scrubs private data before queries reach public systems. This has allowed them to maintain compliance with regulations like the Affordable Care Act and HIPAA.
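Three of the SecureGPT controls described above (role-based limits on queries, scrubbing private data before a query leaves, and logging every interaction) can be illustrated with a minimal sketch. This is not BCBSM’s implementation: the role names, topic keywords, regex patterns, and the `send_to_model` callable are hypothetical placeholders, and keyword matching plus a few regexes fall far short of real HIPAA de-identification.

```python
import re
from datetime import datetime, timezone
from typing import Callable

# Hypothetical role-to-topic policy (assumption); a real deployment would
# pull this from its access-control system rather than hard-coding it.
ROLE_TOPICS = {
    "benefits_analyst": {"coverage", "exclusions", "plan"},
    "contracts_staff": {"contract", "pricing", "terms"},
}

# Simplistic patterns for a few identifier types (illustrative only).
PII_PATTERNS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"), "[EMAIL]"),
    (re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"), "[PHONE]"),
]

# In-memory audit trail; a real system would write to durable, tamper-evident storage.
AUDIT_LOG: list[dict] = []


def scrub(text: str) -> str:
    """Replace matches of each identifier pattern with a placeholder label."""
    for pattern, label in PII_PATTERNS:
        text = pattern.sub(label, text)
    return text


def guarded_query(user: str, role: str, prompt: str,
                  send_to_model: Callable[[str], str]) -> str:
    """Scrub the prompt, log the exchange, and forward it only if on topic for the role."""
    clean = scrub(prompt)
    allowed_topics = ROLE_TOPICS.get(role, set())
    words = set(re.findall(r"[a-z]+", clean.lower()))
    on_topic = bool(allowed_topics & words)

    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "prompt": clean,  # only the scrubbed text is stored
        "allowed": on_topic,
    })

    if not on_topic:
        return "Request outside the topics permitted for this role."
    # Only the scrubbed prompt ever reaches the external model.
    return send_to_model(clean)
```

Passing a stub such as `lambda s: s` for `send_to_model` shows exactly what would have been sent to the model. Scrubbing before the topic check means neither the log nor the model ever sees the raw identifiers.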

Medical AI Risks and Challenges

This all sounds awfully good. In fact, at this stage, you may be thinking it’s too good to be true.

Because medicine is traditionally a conservative field grounded in the necessity of precedent and proof, the speed of AI adoption here can be more than a little frightening.

This is an area where hallucinations at current rates may simply be unacceptable for many use cases. One study, as reported in The Washington Post, found that ChatGPT gave “inappropriate” answers 20% of the time.

Most direct medical uses still don’t require FDA approval because they function as assistants rather than decision-makers, with doctors expected to check all the advice given. As Adam Rodman, AI researcher and doctor at Beth Israel Deaconess Medical Center, told the Washington Post’s Geoffrey A. Fowler:

“I do think this is one of those promising technologies, but it’s just not there yet… I’m worried that we’re just going to further degrade what we do by putting hallucinated ‘AI slop’ into high-stakes patient care.”

For this reason, outside of testing and experimentation, the best AI applications are still those that are getting double-checked, or where the possibility of failure is not critical (or, as with many claims processing cases, already exists at very high rates).

Some of the other concerns here include:

1. AI Model Bias: Studies have shown chatbots can magnify the biases of medical professionals, as well as create their own. In some examples, AI output has propagated sexist and racist myths (such as the false belief that Black patients can tolerate more pain). Brookings research also warns that representation gaps in source data can lead to underdiagnosis that mirrors those gaps.

2. Privacy and Security: All industries implementing AI solutions must safeguard customer and employee data, but few are as heavily regulated as healthcare, where data breaches can expose people to real-world harm. As AI solutions continue to come online at a breakneck pace, organizations need to follow approaches like BCBSM’s to ensure the wrong data isn’t shared publicly and that new solutions don’t increase their exposure to cyberattacks.

3. Overreliance: One common concern about using AI in healthcare is that, as it becomes increasingly effective, doctors may rely on it too much and stop doing their own due diligence at traditional levels. Over time, this could reduce our ability to diagnose new and unique threats that lack extensive case data to draw from.

4. Accountability: And if an AI system is used without being properly checked (by regulatory bodies or the doctors using it), who’s to blame? With the cost of failure so high, such disputes may fall into a gap between solution developers and the facilities that use their products, as the legal field hurries to catch up to AI.

What This Has to Do with Us

So what’s PTP’s role in all of this?

As an accomplished tech recruiting and consulting firm with over two decades of experience, we provide experienced staff to carry out and improve AI transitions, and we also offer our own services directly.

For example, we have helped organizations use AI for healthcare scheduling. By automating routine processes, including dialing and fielding queries, making confirmations, and rescheduling, companies have freed up precious human resources that can then be applied to providing better quality of care.

When balancing medical AI’s benefits and challenges, many considerations are the same as in other industries: implementing solutions that keep humans in the loop, tracking and verifying results, starting where failure and experimentation are still acceptable, and applying AI’s incredible ability to scale where it addresses real pain points.

But there are also unique concerns in this arena of real physical impact.

As we move from the experimental to the pragmatic, AI is already making the administration of care faster, more accurate, and more accessible. But there’s still a long way to go, both in the solutions themselves and in training medical professionals in the skills needed for AI’s efficient, safe, and effective use.

References

A.I. Chatbots Defeated Doctors at Diagnosing Illness, The New York Times

Risks and remedies for artificial intelligence in health care, Brookings

Should you trust an AI-assisted doctor? I visited one to see, The Washington Post

How One Major Healthcare Firm Became the Leader in Innovative AI Use, Harvard Business Review