Are you ready for AGI?

Ready for systems that can do generally what most people can do, remotely over computers?

Is that even AGI? And what does it mean to be ready? And is it really close enough to care?

It was a little more than a year ago when artificial general intelligence (AGI) fever first seemed to sweep through the tech world. Articles, videos, and podcasts were everywhere mentioning the singularity, a theoretical point at which technology will surpass human capacity, irreversibly.

There was a flurry of discussions about putting the brakes on development, on whether open-source AI could be safe enough, and on who should be trusted to safely guide its development and regulation.

[The PTP Report wrote one of our own—check it out here for an overview of the concept of AGI and how it might come about.]

So why are we talking about it again?

In a recent discussion with Y Combinator’s Garry Tan, Sam Altman acknowledged that he believed AGI may come in 2025, and, going even further, that superintelligence, or ASI, is maybe “thousands of days away.”

He pointed to narrow reasoning successes with OpenAI’s o1, and said he believed that, compounded with the company’s other developments, progress was moving far and fast and not yet showing limits.

In contrast, on the Hard Fork podcast, Miles Brundage, the former Senior Advisor on AGI Readiness at OpenAI (he’d been at the company for six years before leaving in late 2024 to work on policy research and advocacy for AGI-readiness), said he does not believe that OpenAI, any other frontier AI lab, or society at large is truly ready for AGI.

But he seemed to agree with his former boss on a timeline, saying “in the next few years” there will be systems (whether called AGI or not) that can do basically anything we can do on a computer.

He also said that these developments will be extremely disruptive, and that people need to be prepared.

In this week’s PTP Report, we return to AGI, looking at the predictions of Sam Altman and others, considering what it is, and what impact such systems may have on work in the near term and the world as we know it.

AGI vs AI: What Is It and Why It Matters

To recap, artificial general intelligence or AGI is a hypothetical, yet-to-be-realized stage of development in AI. Many books have been written and research studies conducted, not only on defining it, but also on how to verify that it’s actually here.

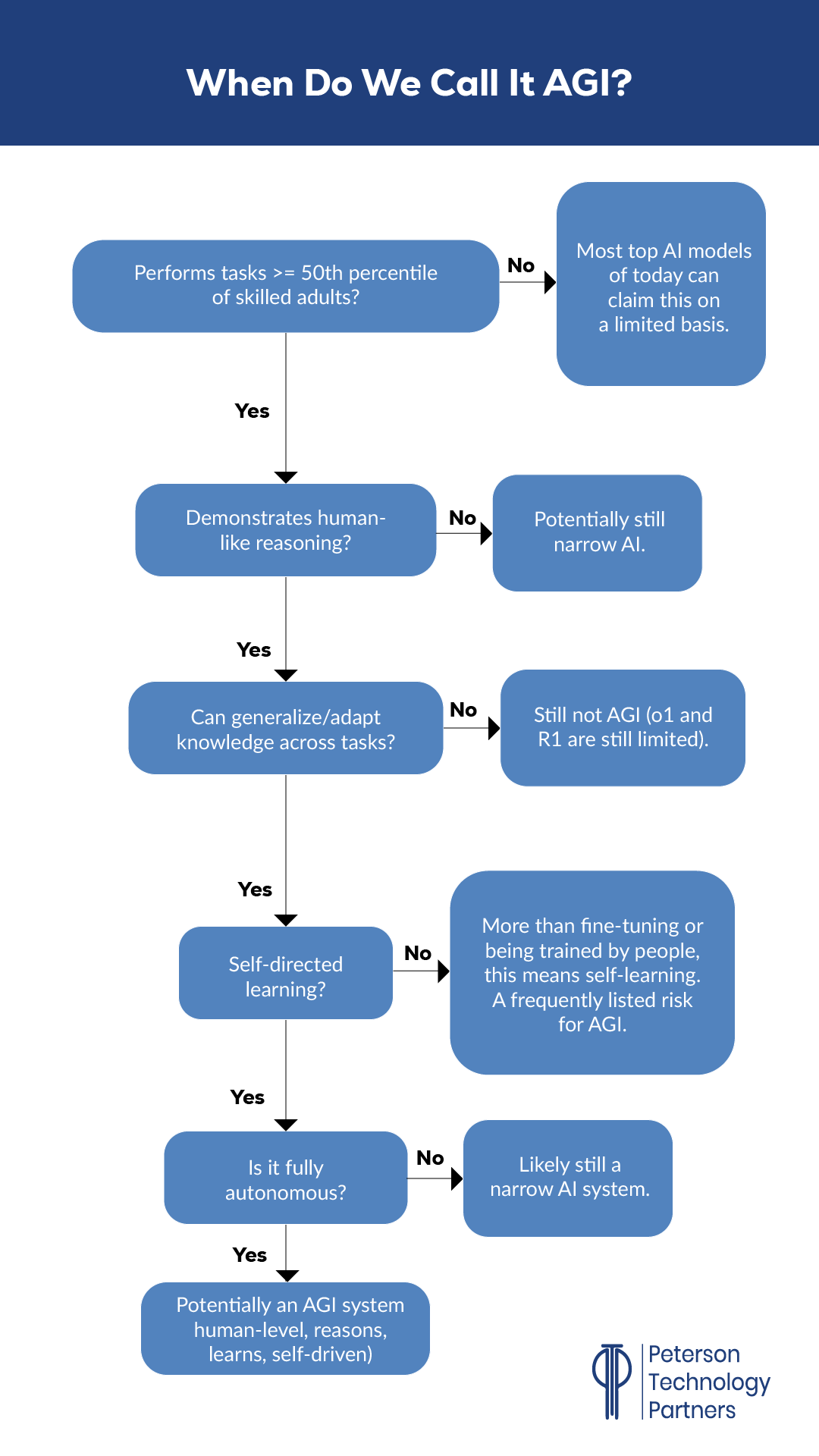

And although there are still differences of opinion (we’ll get to a few), it’s generally agreed that a system should be able to meet these criteria:

An AGI should be able to do tasks as most people can do them, reason and apply knowledge across domains, learn and teach itself new things, and act on its own.

In short, it should behave like an employee.

Assessing When It’s Here

Among the means proposed to test whether a system has reached an AGI level:

- Turing Test: This tests if a human can reliably distinguish between a person and a machine during a conversation. Some argue we’ve already passed this one (a Stanford study in February 2024 claimed GPT-4 did), but others disagree (a McKinsey report from March 2024 finds none are yet close).

- Human Comp Measures: There are many proposed tests built around some complex (and varied) task that many people do and know well, such as: enroll in a university and complete a degree, perform an occupation at a level comparable to current workers (on a daily basis), assemble items provided in a box with instructions, or enter a home, find the kitchen, and make a pot of coffee (this one credited to Steve Wozniak). Some of these would require robotics, for fine motor skills and movement. Critical in most of these tests is subjecting the system to something it hasn’t experienced, and forcing it to adapt as people do every day.

- Modern Turing Test: Proposed by Microsoft AI CEO Mustafa Suleyman, this test would require an AI to handle this prompt: “Go make $1 million on a retail web platform in a few months with just a $100,000 investment.” This would require working across many domains: conducting R&D, interfacing with manufacturing and logistics, handling contracts, and marketing. As he wrote in an essay in the MIT Technology Review: “It would need, in short, to tie together a series of complex real-world goals with minimal oversight.”

- Cognitive-Science Based Testing: Many researchers argue we should use tests akin to human intelligence assessments, covering knowledge and skills, adaptability, social skills, and more, to get at the wide range of human capacities we consider part of intellect. That is, test the machine intelligence as humans are, in any number of ways.

Already here we see wide discrepancies, and one of the largest is whether AGI requires robotics for movement and the capacity to manipulate things in the real world, or can exist as remote employees do, through a computer.

Regardless, even for the test to make a million dollars in a few months with $100k in seed money, Mustafa Suleyman wrote in 2023 that he believed such capacity may only be a few years away.

That could mean this year.

Artificial Superintelligence (ASI)

The level of capacity required to be AGI is highly variable (from making coffee to a million dollars) and this is a point also made by Suleyman in his essay. He advocates for further divisions, proposing artificial capable intelligence (ACI) much as many have already broken out artificial superintelligence (ASI).

The latter refers to AI systems that far surpass human intelligence, and many researchers believe that once AGI is achieved, ASI would not be far behind.

OpenAI safety researcher Stephen McAleer tweeted in January (on the 3rd, the 12th, and the 9th, in the order quoted below):

“I kinda miss doing AI research back when we didn’t know how to make superintelligence.”

And:

“Controlling superintelligence is a short-term research agenda”

And:

“I will say this: many researchers at frontier labs are taking the prospect of short timelines very seriously, and virtually nobody outside the labs is talking enough about the safety implications.”

Whether or not this measure of superintelligence is true ASI, he shares a concern being voiced by many in the industry.

OpenAI and Microsoft: Motivation for AGI 2025

Another thing complicating the definition is of course business.

When Sam Altman remarked late last year that AGI might be possible in 2025, there was immediate and widely reported speculation he had a highly practical motivation in potentially moving up the timeline.

Reportedly, Microsoft will lose current access to OpenAI’s technology when the former startup succeeds at achieving AGI.

And as discussed here, that could mean many things, incentivizing Altman to claim it’s soon.

Potentially debunking this are newer reports that documents leaked in December show the companies have a far more specific definition already set.

This “AGI condition” supposedly comes about when AI is developed that can generate $100 billion in profits. If this is true, they are nowhere near this, making it unlikely to have motivated Altman’s comments.

Regardless, the point here is that it’s impossible to separate the financial realities from even the definition of AGI, just as it is with many benchmarks and even claims of what constitutes agentic AI systems.

The Timeline Shifts

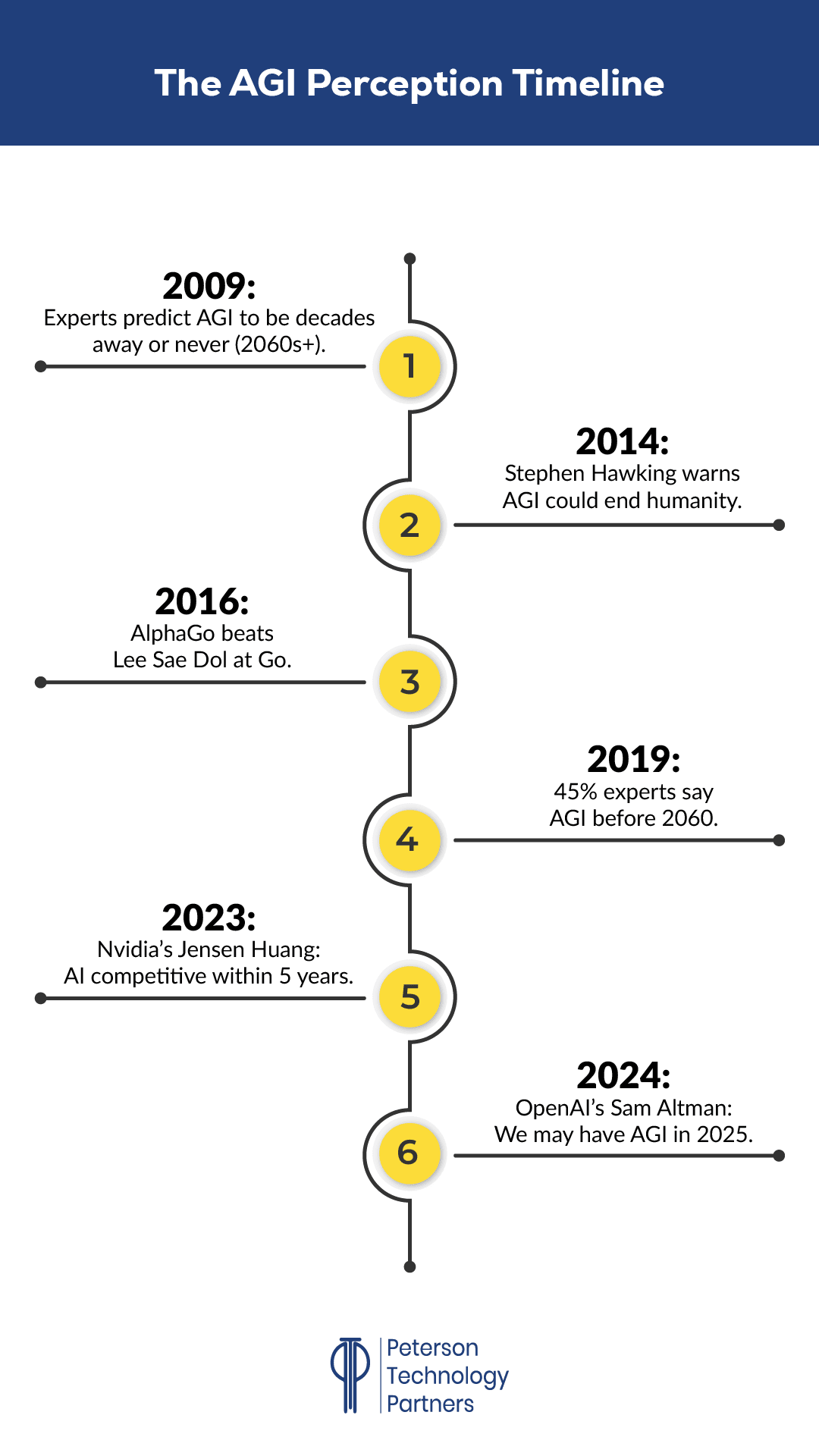

Obviously it makes sense for a technology with such a loose and shifting definition to also see wildly varied predictions of when we will reach it, and that’s certainly been visible over time:

We’ve already talked about Sam Altman and Mustafa Suleyman. The same prediction range has also come from Google DeepMind’s Demis Hassabis and Nvidia’s Jensen Huang.

Geoffrey Hinton, one of the “godfathers of artificial intelligence” who was jointly awarded the Nobel Prize in Physics in 2024 for his work in AI development, also moved his own timeline up, telling The New York Times:

“But most people thought it was way off. And I thought it was way off. I thought it was 30 to 50 years or even longer away. Obviously, I no longer think that.”

He may have also adjusted other numbers, lifting his view on the likelihood AI superintelligence could wipe us out. He told the BBC in December 2024 that he believed there was a 10% to 20% chance.

“I like to think of it as: imagine yourself and a three-year-old. We’ll be the three-year-olds.”

Another of the so-called “godfathers of AI,” Meta’s Chief AI Scientist Yann LeCun, disagrees, saying he believes AI may actually provide the means to save humanity.

Long a skeptic of AGI, LeCun has perhaps changed his tune slightly, acknowledging that current progress could mean AGI in less than a decade, while maintaining that it will require a significant rethinking, and more than just scaled LLMs or combinations of LLMs and current reasoning models (like OpenAI’s o3).

AGI Acceleration Risks

We opened by discussing former OpenAI AGI Readiness Senior Advisor Miles Brundage.

He’s cautioned that the big AI companies are falling behind in safety due to the demands of competition, by not solving even the problems they know about, let alone the ones that are still unknown. (He cites hallucination as an example.) In a technological gold rush that could be greater than any before it, safety may have already become a casualty.

Still, he argued it’s not about putting on the brakes, but rather installing brakes, in the event they’re needed, whereas now there are none.

There’s no dispute at the frontier labs that there’s been significant progress towards AGI, and that the progress is ongoing (even among people with no incentive to hype accomplishments).

Brundage recommended three things in terms of preparing for AGI:

- Try the new developments ASAP. If you haven’t used the latest version of releases from OpenAI, Anthropic, Meta, or DeepSeek, for example, you’re already behind.

- Think about what AGI or even increasingly broad, capable AI might mean for your career or business. Should you be thinking about a more future-proof path? Will your offerings stand up in a world where some percentage of remote workers may be AI?

- Protect yourself, your organization, and loved ones from deepfakes. Many remain skeptical of this threat, even as instances of fraud and cybercrime using this technology are rising fast.

Governments around the world need AI readiness preparation, with sufficient funding. And one of the main issues may be convincing people that AI at this level is a real thing, and not just hype or science fiction.

Managing an AI Talent Shortage in 2025

How big will the step be between AGI and AI agents of the kind we’re seeing now, that can browse the web and do some tasks for you autonomously, make calls to clients and field them, schedule, survey, market, and debug code?

It’s impossible to know for sure, but each step will see further automation past roles in customer service and data entry. Coding, finance, and legal support roles are already seeing increasing automation. We wrote previously about implementations in the healthcare field, for example.

In all these cases, organizations need AI technical professionals to pair with subject matter experts. In this race, the best talent goes fast, and often at a high price. While there’s great interest in AI and a growing awareness of its essential role in the future across businesses, the supply of and demand for quality talent are also shifting fast.

At PTP we have our own pipeline of AI and ML talent, whether your needs are onsite or off, and more than 27 years of experience pairing great people in tech with organizations in need.

As you look to keep pace with the next steps in AI, consider PTP for your talent needs.

Conclusion

AGI is a variable term that even pioneers in the field struggle to define.

But the truth behind it, that we will soon have AI systems capable of providing human-like talent remotely through computing services, is striking and still not fully anticipated by many who are used to slower-moving innovations.

Consider this: even the Chinese DeepSeek shockwave that took more than a trillion dollars off the market cap of the big tech companies hasn’t slowed down investment, with the big four AI companies committing 46% more to their AI budgets than last year.

There are serious issues to be resolved before we have AGI, and none more dramatic than the alternate takes of Hinton and LeCun: will AI doom us or save us?

Perhaps neither. But one thing is for sure: this impact is going to be significant.

References

How To Build The Future: Sam Altman, Y Combinator video series

Billionaire Game Theory + We Are Not Ready for A.G.I. + Election Betting Markets Get Weird, Hard Fork Podcast on The New York Times

Levels of AGI for Operationalizing Progress on the Path to AGI, arXiv:2311.02462 [cs.AI]

Study finds ChatGPT’s latest bot behaves like humans, only better, Stanford University

Mustafa Suleyman: My new Turing test would see if AI can make $1 million, MIT Technology Review

Microsoft and OpenAI Wrangle Over Terms of Their Blockbuster Partnership, The Information

Google DeepMind CEO: AGI is Coming ‘in a Few Years’, AI Business

‘Godfather of AI’ shortens odds of the technology wiping out humanity over next 30 years, The Guardian

Big Tech set to invest $325 billion this year as hefty AI bills come under scrutiny, Yahoo! Finance