Of all the ways you may have imagined AI impacting cybersecurity, I doubt this was one of them: a company heavily vets and then hires a qualified remote worker, and they even do quality work, only they’re not who they say they are.

They’re also not where they say they are, with sources like Wired reporting that the money they’re paid helps fund programs like North Korea’s nuclear program and additional cybercrime.

Implausible as this sounds, it’s real, and the threat is growing.

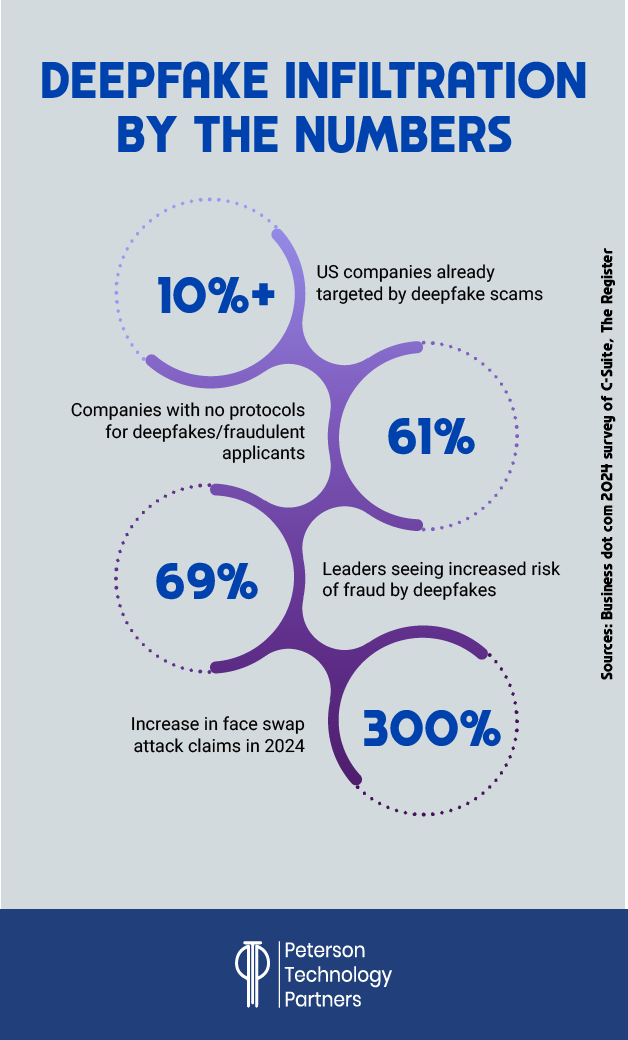

Enabled by a unique combination of remote work and deepfake AI technology, these scams are surprisingly prevalent, hard to detect, and can result in lingering surveillance, ransomware attacks, data exfiltration, and code theft.

In today’s PTP Report, we look at how this scam works, consider real examples, and profile ways companies can fight back.

In an age where AI helps bridge language and cultural barriers, generates great audio, and is getting increasingly successful at swapping faces in streaming video, how well do you know the people you’re working with?

The Emergence of Deepfake AI Employment Scams

The FBI has been warning of fake job listings for years, but the emergence of widespread fake applicants comes as more of a surprise.

It’s not easy to fake your identity through a multi-stage interview process, with references, past work experience, and college degrees, and before COVID and the rise of remote work, this was incredibly difficult to pull off in any sustained way.

Warnings of the use of deepfake technology for this began back in 2022, though at the time it’s believed most were still being caught. The MIT Media Lab made deepfake detection cheat sheets and websites (their DetectDeepfakes project just ended in January of this year), and companies began to market deepfake detection tools.

But AI has advanced significantly since then. The FBI’s more recent warnings indicate that there are likely hundreds of North Korean agents currently working at US companies and thousands that have been hired in recent years. (A single case profiled below involved jobs at more than 300 US firms.)

What is AI-Generated Employee Fraud?

The basic concept is simple: someone gets hired at a company using a false name and false credentials. The tools involved include stolen IDs, fake fronts for interviewing, real addresses for routing mail, generative AI to aid in communications, and even deepfake video and audio for necessary interactions.

They can also:

- Use US internet connections by laundering US company laptops through domestic facilitators

- Take advantage of remote desktop technology to run machines from abroad

- Ship machines from American addresses overseas

- Utilize US-based front businesses, for credentials and even as fake recruiting firms

- Use US-based financial accounts for payments and transactions

- Use real, stolen identities for hiring and even to submit taxes

- Use virtual camera software to replace live video feeds with injected content

New Among Remote Work Security Risks

Remote work has been used effectively for a long time and in many positions is more successful and cost-efficient than on-site, but that doesn’t change the fact that it brings certain risks to the table.

With an excuse not to meet in-person, it’s harder to prove someone on the other end of a call is the person they say they are. But this can also go a step further.

How can you prove that the person you interviewed is the same person who is now working the job?

While remote work opens the door to much wider talent pools, it also makes this scam possible.

Deepfake Scams in Action

Let’s look at specific infiltrations that have come to light in just the last two years.

C-Suite Fraud Cases

While not always cases of fraudulent workers being hired, more than half of companies have been targeted with audio and video deepfake attacks according to cybersecurity firm Regula. And some of the most high-profile cybersecurity threats from deepfakes have involved executives.

Last October, the CEO of cybersecurity firm Wiz appeared to send a voice message to employees requesting login credentials, but this was a deepfake, which employees sniffed out.

Though well made, the fake appeared to be built from speeches Wiz CEO Assaf Rappaport had made publicly. Luckily, he affects mannerisms on stage to overcome stage fright, and these differ from the way he talks in person.

Last May, the world’s largest advertising company WPP suffered a similar attack, when their CEO appeared to create and even attend a Microsoft Teams meeting, asking for funds and personal information.

But this attack, too, was unsuccessful, when the team guessed they were dealing with a deepfake and not their actual CEO.

A successful attack in early 2024 saw a finance employee at a multinational firm tricked into paying out more than $25 million to the attackers.

Though the initial approaches seemed like phishing, the employee still attended a video call where all the other attendees were AI deepfakes, including the firm’s UK-based CFO.

They were so convincing that the employee overcame doubts and made the transfer. The mistake was only discovered when they later checked in with the home office.

Hong Kong police, in investigating this deepfake cybercrime, confirmed that deepfakes have become convincing enough to fool even facial recognition software.

A Staple of North Korean Cybercrime

Hackers known as the Lazarus Group, backed by the North Korean government, recently pulled off the biggest crypto heist in history ($1.5 billion) by compromising free storage software and luring the CEO into approving a transfer.

And while this didn’t involve deepfakes or fraudulent employees, it shows the sophistication of North Korean hacking groups aggressively targeting businesses around the world.

There have been numerous cases of North Korean operatives working undercover at US companies. One of the largest exposures involved Christina Marie Chapman, an Arizona woman who ran a laptop farm to enable foreign IT workers.

According to court documents, she helped criminals steal more than 70 US identities, which were then used to get remote IT jobs at more than 300 US firms.

These included Fortune 500 companies, television networks, a Silicon Valley technology company, and an aerospace and defense manufacturer, and much of the income was even falsely reported to the IRS and Social Security Administration.

It’s estimated by the US Justice Department that these scams have brought North Korea more than $88 million through seemingly legitimate work done by fraudulent employees alone.

Even a security engineer at a firm that uses AI to find vulnerabilities nearly hired a fake.

As Dawid Moczadło told the Register’s Jessica Lyons:

“We spent and lost more than five hours on him… And the surprising thing was, he was actually good. I kind of wanted to hire him because his responses were good; he was able to answer all of our questions.”

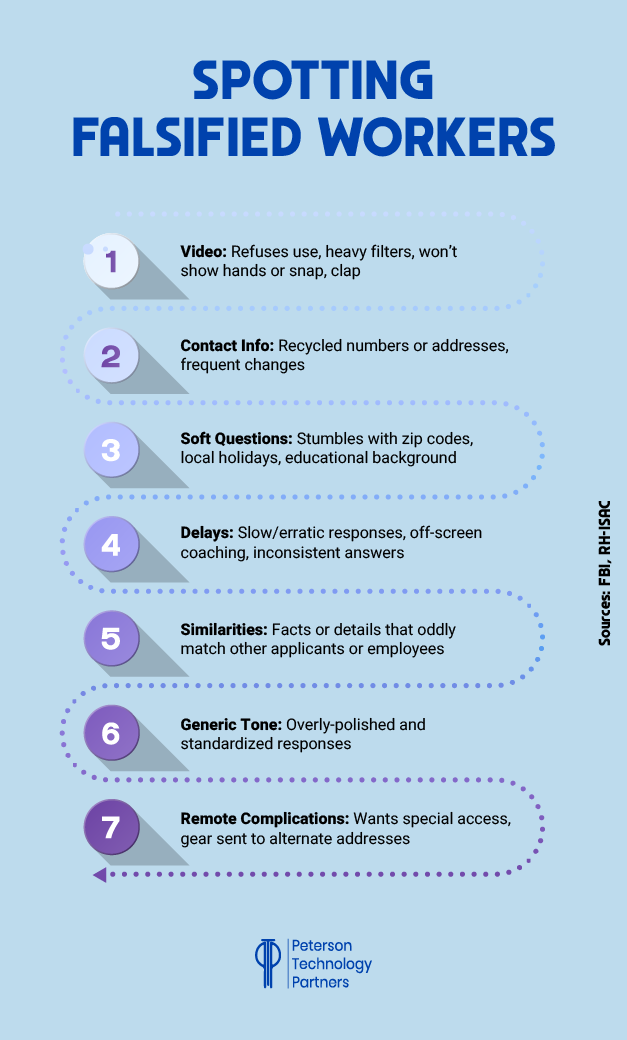

The tipoff in this case came from the final video interview stage, where the image was glitchy, and the movement seemed irregular.

And two months after this rejection, they got another one. This time, they repeatedly asked the candidate to wave a hand in front of the camera, but the interviewee refused.

AI-Powered Identity Theft at Cybersecurity Firms

Some of the most desired targets appear to be cybersecurity firms themselves.

The firm KnowBe4 actually hired one. The applicant passed background checks, got through four different video interviews, and had a photo that matched their identification. The company later learned that the identity was stolen and that the photo had been adapted by AI.

The discovery was only made when the employee’s machine was found downloading malware. After sharing data with Mandiant and working with the FBI, they confirmed this worker was a North Korean operative.

Detecting and Defending Against AI Fraud in Remote Work

While many of these examples have been of workers who were either caught before getting hired or did their jobs, the FBI warned in January that businesses have also suffered far more damaging repercussions, including:

- After discovery, extorting employers with ransomware

- Releasing proprietary code and large-scale theft of codebases

- Lurking in systems, such as by harvesting credentials and session cookies to initiate work sessions on additional devices

To prevent this, companies must improve their applicant vetting, but they also need to secure and monitor their data and systems traffic.
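One of the lurking behaviors listed above, reusing harvested session cookies on additional devices, lends itself to a simple monitoring check. The sketch below is only an illustration under stated assumptions: the `(session_id, device_id)` record shape, the function name `find_shared_sessions`, and the sample values are all hypothetical and would need to be mapped to whatever your identity provider or proxy logs actually export.

```python
from collections import defaultdict


def find_shared_sessions(events):
    """Return session IDs that were seen on more than one device.

    `events` is an iterable of (session_id, device_id) pairs. This is a
    hypothetical record shape, not the export format of any specific product.
    """
    devices_by_session = defaultdict(set)
    for session_id, device_id in events:
        devices_by_session[session_id].add(device_id)

    # Keep only sessions observed on two or more distinct devices.
    return {
        session_id: sorted(devices)
        for session_id, devices in devices_by_session.items()
        if len(devices) > 1
    }


# Illustrative records only; these are not real log entries.
sample_events = [
    ("sess-a1", "laptop-0042"),
    ("sess-a1", "laptop-0042"),
    ("sess-b7", "laptop-0107"),
    ("sess-b7", "unknown-vm-3"),
]

shared_sessions = find_shared_sessions(sample_events)
print(shared_sessions)
```

A hit here is a prompt for investigation rather than proof of fraud, since legitimate users sometimes move between devices too.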

To spot potential fraudsters, keep the following in mind:

As KnowBe4 and others learned, background checks are insufficient to catch this scam.

Vet references carefully (beyond email), and get candidates on camera multiple times, with required interaction involving hands and even showing areas beyond the frame where possible.

These adjustments on the fly make injected AI video far harder to pull off without glitches or visible flaws.

Where possible, limit the ability for workers to install remote desktop applications, disable local admin accounts, and continually monitor your network traffic.
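As a rough illustration of the first point, a software inventory can be screened for remote desktop applications that have not been approved. This is a minimal sketch, not a complete control: the watchlist entries, the `flag_remote_desktop_tools` name, and the sample inventory are assumptions chosen for the example, and a real deployment would pull the inventory from your endpoint management tooling.

```python
# Illustrative watchlist of remote desktop tools (an assumption for this
# example); tailor it to your own policy.
REMOTE_DESKTOP_KEYWORDS = (
    "anydesk",
    "teamviewer",
    "rustdesk",
    "chrome remote desktop",
    "splashtop",
)


def flag_remote_desktop_tools(installed_software, approved=()):
    """Return installed program names that match the watchlist and are not approved."""
    approved_lower = {name.lower() for name in approved}
    flagged = []
    for name in installed_software:
        lowered = name.lower()
        if lowered in approved_lower:
            continue  # explicitly allowed by policy
        if any(keyword in lowered for keyword in REMOTE_DESKTOP_KEYWORDS):
            flagged.append(name)
    return flagged


# Hypothetical inventory for one laptop.
inventory = ["Slack", "AnyDesk 8.0", "Visual Studio Code", "TeamViewer Host"]
flagged_tools = flag_remote_desktop_tools(inventory, approved=["TeamViewer Host"])
print(flagged_tools)
```

Keyword matching is deliberately simple here; it will miss renamed binaries, which is one reason to pair it with network monitoring.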

We’ve written before about Zero Trust approaches, which can assist not only in identifying suspicious behavior but also containing it, so even fraudulent workers would be limited in their ability to move laterally and lurk in systems.

The FBI’s cybersecurity recommendations include monitoring network logs and browser session activity for exfiltration that may be moving data to shared drives or cloud accounts. Also watch endpoints for software that allows multiple video calls to take place simultaneously.
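Both checks can be approximated with very little code once the data is available. The sketch below assumes hypothetical inputs: network log rows as `(user, domain, bytes_out)` tuples, call records as `(start, end)` minutes for a single endpoint, an example list of watched domains, and an arbitrary 500 MB threshold. None of these come from the FBI guidance itself, so treat them as placeholders.

```python
from collections import defaultdict

# Assumed examples of shared-drive and cloud destinations; adjust as needed.
WATCHED_DOMAINS = ("drive.google.com", "dropbox.com", "onedrive.live.com")


def is_watched(domain):
    """True if the domain is a watched domain or one of its subdomains."""
    return any(domain == d or domain.endswith("." + d) for d in WATCHED_DOMAINS)


def flag_large_uploads(log_rows, threshold_bytes=500_000_000):
    """Sum bytes each user sent to watched domains; return users over the threshold."""
    totals = defaultdict(int)
    for user, domain, bytes_out in log_rows:
        if is_watched(domain):
            totals[user] += bytes_out
    return {user: total for user, total in totals.items() if total > threshold_bytes}


def has_concurrent_calls(calls):
    """Return True if any two (start, end) call intervals overlap."""
    ordered = sorted(calls)
    # After sorting by start time, any overlap shows up between neighbors.
    for (_, prev_end), (next_start, _) in zip(ordered, ordered[1:]):
        if next_start < prev_end:
            return True
    return False


# Illustrative data only.
rows = [
    ("alice", "drive.google.com", 300_000_000),
    ("alice", "uploads.dropbox.com", 350_000_000),
    ("bob", "dropbox.com", 1_000_000),
    ("bob", "example.com", 900_000_000),
]
heavy_uploaders = flag_large_uploads(rows)
overlapping = has_concurrent_calls([(540, 600), (590, 620)])
back_to_back = has_concurrent_calls([(540, 600), (600, 630)])
print(heavy_uploaders, overlapping, back_to_back)
```

Overlapping calls on one machine are worth a look because a single person holding several jobs at once, or several people sharing one identity, may need to be in more than one meeting at the same time.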

As always, educating your team may be the best defense. As we’ve seen above, people willing and able to act on suspicions have prevented attacks, while those who haven’t have cost companies dearly.

Partner with Vetted Staffing Firms like PTP

Like many hiring firms, PTP has had its own experience receiving fake applicants.

With more than 27 years of experience vetting tech professionals, we’ve developed a deep, rigorous process that implements the suggestions discussed here.

Our CIO-led screening process involves getting a deeper sense of applicants, and our recruiters know how to act on their instincts when things feel “off.”

There are several quality partners for businesses to trust, and regardless of who you choose, partnering with experienced hiring professionals can spare you the risk and headache of trying to catch the latest deepfake tricks being used by applicants.

Just be sure that whoever you trust exercises sufficient diligence as tactics grow more and more sophisticated.

Conclusion

Five years ago, it may have sounded absurd to suggest companies would face a real risk of not only hiring but continuing to work with fraudsters appearing under an AI-cloaked guise. Chatting with them, going to meetings together, and working side-by-side without having a clue.

Sadly, this is now reality. After years of pushing this scam with limited success, the tools and methods have reached the stage where they’re fooling even polished HR teams (and nearly security pros).

The next step in this chain of hoaxes may be entirely artificial applicants, but until then, stay vigilant, and when in doubt, ask applicants to snap their fingers, get up from the camera and sit back down, or show the room around them.

References

Detect DeepFakes: How to counteract misinformation created by AI, Affective Computing Project, MIT

Alerts: I-051624-PSA (May 16, 2024) and I-012325-PSA (January 23, 2025), Federal Bureau of Investigation

It’s bad enough we have to turn on cams for meetings, now the person staring at you may be an AI deepfake, The Register

Finance worker pays out $25 million after video call with deepfake ‘chief financial officer’, CNN

Hackers Targeted a $12 Billion Cybersecurity Company With a Deepfake of Its CEO. Here’s Why Small Details Made It Unsuccessful., Entrepreneur

How a North Korean Fake IT Worker Tried to Infiltrate Us, KnowBe4 Security Awareness Training Blog

A US Company Enabled a North Korean Scam That Raised Money for WMDs, Wired

Arizona laptop farmer pleads guilty for funneling $17M to Kim Jong Un and I’m a security expert, and I almost fell for a North Korea-style deepfake job applicant …Twice, The Register

FAQs

How do deepfake job applicants bypass background checks?

The truth is that many companies rely only on automated or surface-level background checks. With identities purchased on the dark web, applicants can often pass basic screenings that match names to public records. The more sophisticated schemes go further, using AI to create synthetic identities (mixing real details with fake ones), which can also fool systems that lack sufficient cross-checking. Add in the fact that remote hires often never have to produce physical documentation, and these scams become much harder to detect.

Are companies legally liable if they accidentally hire a deepfake employee from a sanctioned country?

Yes—and penalties can be severe. Hiring workers from countries under sanctions like North Korea or Iran can trigger violations for US companies. And even if it was unintentional, you can still be found responsible. The Arizona laptop case demonstrates how this scam can result in criminal charges and reputational harm.

Can AI help detect deepfake applicants, or is this just a human judgment call?

Yes, AI can definitely aid in catching deepfakes, but real-time detection is harder, and knowing the full extent of its efficacy for live video is also challenging. Some providers claim as high as 90% effectiveness, but AI injected into low-resolution video is harder to catch. As noted in the article, the best check at present may be trained humans, and the near-term future will most certainly mean a combination of AI defenses and trained humans.