Software quality issues cost the US trillions of dollars a year, according to the Consortium for IT Software Quality (CISQ). Even in data that’s two years old, the number was put at $2.4 trillion, up from $2 trillion in 2020. Add in the following from Forbes:

- Catching bugs late in development can make their correction 100 times more expensive

- As much as 50% of development budgets go to addressing quality issues

Quality Assurance (QA) is an essential part of any software development lifecycle, but it often finds itself tasked with nearly impossible objectives: Cover as much as possible, as continuously as possible, at the lowest cost possible.

We recently looked at how AI is being integrated into testing to help: replacing manual work by generating test scripts and test data, and automating repetitive tasks with tools like GitHub Copilot and Playwright.

Today we revisit this area, looking more specifically at how AI agents are being used in testing.

With agents rapidly coming online at varying scales and across industries and use cases, we consider how they are applied as part of AI-powered QA frameworks to improve coverage and code quality, while also reducing the burden on developers.

Agents vs AI-Powered Software Testing

AI jargon may be moving faster than even the technology itself, and no term right now is more variably applied than AI agent.

We’ve considered what it means in The PTP Report numerous times, but as it’s a moving target, we mean here an AI-powered system that can perform tasks autonomously or achieve ends without being driven directly by people (or used like a tool).

There’s a big range even within this, from AI systems using provided utilities and workflows, step by step, to those that build solutions and execute them autonomously, working off only high-level guidance, within certain permissions.

Specific to AI-driven QA solutions, agents enable combining multiple steps with the flexibility to adapt, make their own adjustments, and repeat as necessary.

And while there is great value in using AI in software quality assurance for generating cases, test scripts, quality test data, and reporting, these use cases aren’t agentic on their own.

By taking advantage of agent offerings and tooling like OpenAI’s Operator, Anthropic’s Model Context Protocol (MCP), or the open source AutoGPT, you can automate more of the QA process than ever before.

This can mean automation at any of the following levels:

1. Agentic Workflow Handling: Developers provide everything needed, and the AI system chooses when to execute. This is probably the lowest form of what you’d consider agentic, as it’s simply working through a series of steps in order, making necessary adjustments with provided utilities.

2. Agentic Iteration and Adjustment: More useful and complex, this kind of agentic testing builds on #1 by measuring against results to repeat and refine as necessary. It has the ability to self-heal, by revising and refining scripts.

3. Agentic Execution: Working from high-level instructions, agent solutions at this level write and execute code, making decisions on what tools to use, and when, and how to best achieve their provided objectives, within allowed parameters.
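To make the second level a little more concrete, here is a minimal sketch of a run, revise, and retry loop. It is an illustration under stated assumptions rather than how any particular framework works: `Script`, `run_script`, `revise_script`, and the selectors are hypothetical names, and `revise_script` is a hard-coded stub standing in for a model call so the example runs offline.

```python
from dataclasses import dataclass, field

# Hypothetical page under test: only this selector currently exists.
PAGE_ELEMENTS = {"#submit-order"}


@dataclass
class Script:
    """A test script reduced to the one selector it depends on."""
    selector: str
    history: list = field(default_factory=list)  # errors seen so far


def run_script(script: Script) -> tuple[bool, str]:
    """Execute the test and return (passed, error_message)."""
    if script.selector in PAGE_ELEMENTS:
        return True, ""
    return False, f"element not found: {script.selector}"


def revise_script(script: Script, error: str) -> Script:
    """Stand-in for a model call that proposes a fix from the error text.

    Assumption: a real agent would send the script and the error to an LLM.
    This stub only knows one stale selector and its replacement.
    """
    known_fixes = {"#submit": "#submit-order"}
    new_selector = known_fixes.get(script.selector, script.selector)
    return Script(new_selector, script.history + [error])


def run_with_refinement(script: Script, max_attempts: int = 3):
    """Run the script, revising it after each failure, up to max_attempts."""
    for attempt in range(1, max_attempts + 1):
        passed, error = run_script(script)
        if passed:
            return script, attempt
        script = revise_script(script, error)
    return script, None  # still failing: hand off to a human


final, attempts = run_with_refinement(Script("#submit"))
print(final.selector, attempts)
```

The bounded `max_attempts` and the `None` return are the design points worth keeping in a real system: the loop is allowed to adjust itself, but only so many times before a person is asked to look.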

There are abundant examples of the simpler forms of this (#1 and #2), such as using Hugging Face’s Smolagents framework with Python (you can check out their documentation here) to work with Selenium or Playwright for UI or API testing.

In a very short time you can be up and running with agentic AI that takes higher-level testing requirements (such as elements of a webpage to test, or the kinds of data to update or get from an API), then searches online for needed information, and writes the code necessary to run these tests (including getting needed libraries as allowed). It can then execute the tests, generate realistic test data, document all steps, and retry the process as necessary.

And all using the AI model or models you need.

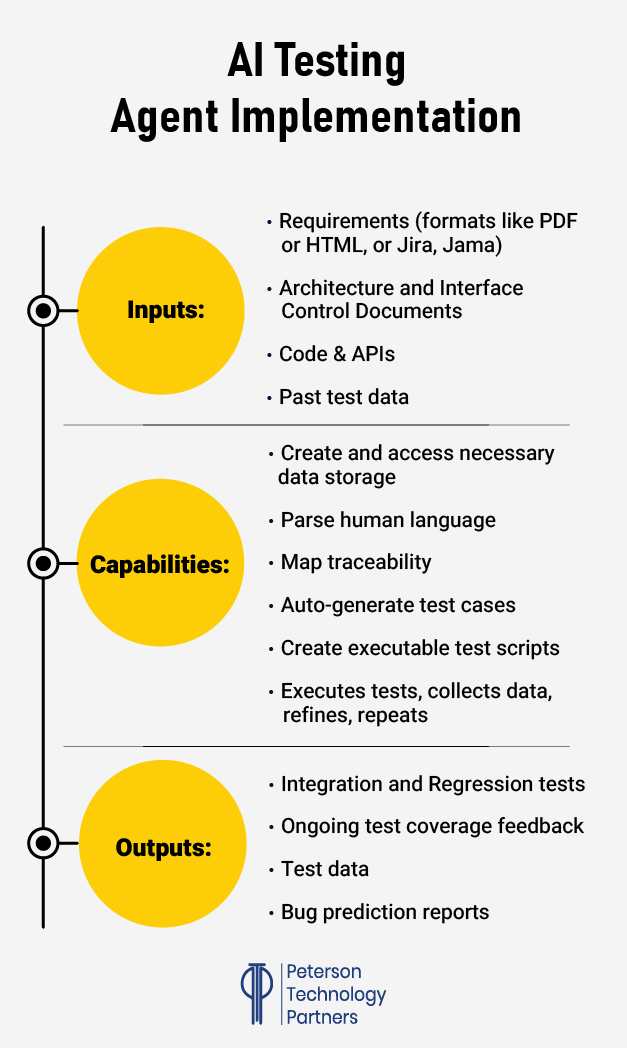

But agentic solutions can now go well past this: taking in human-written requirements documentation, determining what needs to be tested, generating test scenarios, scripts, and code, executing unit, integration, and regression tests, generating quality test data, updating and maintaining test suites without human intervention, and producing reports that can be used to predict future faults before they occur.

By embedding such processes in your CI/CD pipelines, you can already extend coverage in ways that weren’t previously feasible.

A Look at the Nvidia HEPH AI Testing Framework

One well-documented example of such a robust agentic framework is Nvidia’s Hephaestus (HEPH).

This internal generative AI framework works off provided documentation and code samples to design and implement both integration and unit tests.

This custom agentic solution is one example that automates the entire testing workflow.

Taking advantage of natural language processing (NLP), it handles input documentation in a wide variety of formats to create testing requirements. It then implements these with generated tests in C/C++ that are compiled, executed, and verified, with the coverage data being used to further fine-tune the system and refine the tests.
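The coverage feedback step described above can be sketched in a few lines. This is not Nvidia’s implementation, and it uses Python rather than C/C++ for brevity. The function under test, `propose_inputs`, and the idea of treating each return label as a "branch" are all assumptions made for illustration; a real setup would read gaps from a coverage tool and prompt a model with them.

```python
def classify_temperature(celsius: float) -> str:
    """Hypothetical function under test, with three branches."""
    if celsius < 0:
        return "freezing"
    if celsius < 30:
        return "normal"
    return "hot"


# Assumption: each return label stands in for one branch to cover.
ALL_BRANCHES = {"freezing", "normal", "hot"}


def measure(suite: list[float]) -> set[str]:
    """Run every input in the suite and report which branches were hit."""
    return {classify_temperature(x) for x in suite}


def propose_inputs(uncovered: set[str]) -> list[float]:
    """Stand-in for the generation step.

    Assumption: a real system would ask a model for new tests that target
    the coverage gaps. This stub uses a fixed lookup instead.
    """
    hints = {"freezing": -5.0, "normal": 20.0, "hot": 35.0}
    return [hints[branch] for branch in sorted(uncovered)]


def build_suite(initial_inputs: list[float], max_rounds: int = 3):
    """Grow the suite until every branch is covered or rounds run out."""
    suite = list(initial_inputs)
    for _ in range(max_rounds):
        uncovered = ALL_BRANCHES - measure(suite)
        if not uncovered:
            break
        suite.extend(propose_inputs(uncovered))
    return suite, measure(suite)


suite, covered = build_suite([10.0])
print(suite, sorted(covered))
```

The point of the loop is that coverage data, not a person, decides what gets generated next.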

And as it learns, it fills in missing steps and refines problem areas.

You can take a look at their developer blog here for full details, including example testing, test cases, and outputs.

Agentic AI in Software Testing: Initial Strengths and Weaknesses

With the improved reasoning capacity of current AI models, the capabilities in this area are improving rapidly. But already certain patterns are emerging where it flourishes and where there are still challenges to overcome.

Where It Shines:

Automation of this kind is geared to free up people for more specific or unique asks, and there are plenty of repetitive or onerous aspects of testing that are extremely well-suited to AI.

Test data creation and maintenance has long been a time drag on QA teams, and generative AI can anonymize and refresh datasets with high quality and speed.
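To show what the anonymizing step can look like in practice, the sketch below replaces direct identifiers with stable tokens. Note that this is a plain deterministic technique (keyed hashing), not a generative model, and the field names, key, and `example.test` domain are assumptions chosen for the example. Because the same input always maps to the same token, records that referred to the same customer still line up after masking.

```python
import hashlib
import hmac

# Hypothetical key: in practice, load it from a secret store per environment.
SECRET_KEY = b"rotate-me-per-environment"


def pseudonym(value: str, prefix: str) -> str:
    """Map a real value to a stable token that does not reveal the original."""
    digest = hmac.new(
        SECRET_KEY, value.lower().encode("utf-8"), hashlib.sha256
    ).hexdigest()
    return f"{prefix}_{digest[:10]}"


def anonymize_record(record: dict) -> dict:
    """Return a copy with name and email masked; other fields are untouched."""
    masked = dict(record)
    masked["name"] = pseudonym(record["name"], "user")
    masked["email"] = pseudonym(record["email"], "mail") + "@example.test"
    return masked


orders = [
    {"name": "Ada Lovelace", "email": "ada@corp.example", "order_total": 42.5},
    {"name": "Ada Lovelace", "email": "ADA@corp.example", "order_total": 17.0},
]
for row in (anonymize_record(order) for order in orders):
    print(row)
```

Lowercasing before hashing is a deliberate choice here, so that differently cased copies of the same email collapse to one test identity.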

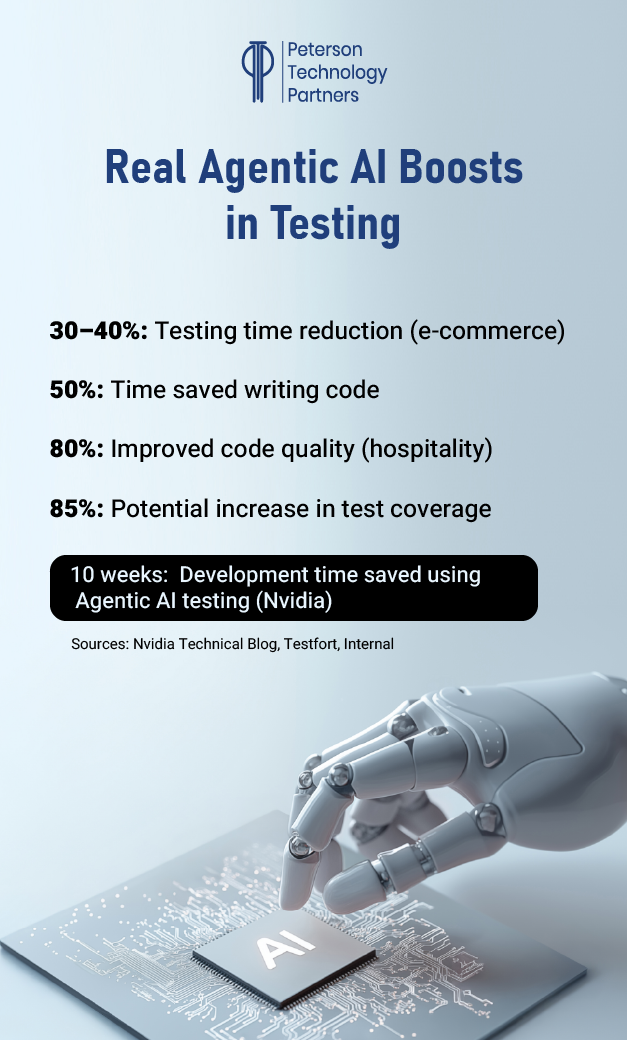

Test creation and maintenance, too, are repetitive and time-consuming processes that are well suited even to non-agentic systems. Scripts can be self-healing, iterating far faster than manual testers can revise and refine steps, and removing problematic breakdowns. This area alone can cut QA time by 50% or more.
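One simple form of self-healing is a locator that falls back to alternative selectors and remembers which one worked. The sketch below assumes a fake in-memory page (`FAKE_DOM`) and a hypothetical `find` helper in place of a real Selenium or Playwright lookup, so it runs without a browser. An agentic system might generate the fallback candidates itself; here they are supplied by hand.

```python
from typing import Optional

# Hypothetical snapshot of the page: selector -> element text.
FAKE_DOM = {"button[data-test='checkout']": "Checkout"}


def find(selector: str) -> Optional[str]:
    """Stand-in for a browser driver lookup; returns None when nothing matches."""
    return FAKE_DOM.get(selector)


class HealingLocator:
    """Tries selectors in order and promotes the first one that works."""

    def __init__(self, primary: str, fallbacks: list[str]):
        self.candidates = [primary, *fallbacks]
        self.healed_from: list[str] = []  # audit trail of replaced selectors

    def resolve(self) -> str:
        for index, selector in enumerate(self.candidates):
            if find(selector) is not None:
                if index > 0:
                    # Record the stale selector, then move the working one
                    # to the front so the next run tries it first.
                    self.healed_from.append(self.candidates[0])
                    self.candidates.insert(0, self.candidates.pop(index))
                return selector
        raise LookupError(f"no candidate matched: {self.candidates}")


locator = HealingLocator("#checkout-btn", ["button[data-test='checkout']"])
print(locator.resolve(), locator.healed_from)
```

Keeping the `healed_from` trail matters as much as the fallback itself, since it gives reviewers a record of what the script changed on its own.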

Coverage is another area of significant gain. By running 24/7, learning and repeating, automated software testing with AI can more than double what can be tested, helping you find bugs far earlier in the development process.

Agents also work well in CI/CD workflows, integrate with version control, and can automate even custom ticketing systems.

Where It Struggles:

As with other systems, more autonomous AI testing agents often provide more value where the workflow is longer and more complex.

AI solutions always introduce the possibility of hallucination, making them unpredictable.

If your workflow is clear and has few needed steps, they may be overkill, removing flexibility and adding unnecessary complexity.

One of the great benefits of agentic AI is that it can adjust dynamically, enabling far more complexity than a switch statement, for example.

But likewise, the less defined (or clear) your requirements are, the less successful automation can be. Where significant interpretation is required, the possibility for mistakes snowballs.

Oversight also remains essential throughout the process, as with all AI-generated code, and especially when integrating with legacy systems or working from existing test cases.

Agentic AI in QA: The Early Numbers

This process is new and changing fast, which makes quality metrics hard to come by. But the early results have been extremely positive.

Nvidia reports that its HEPH system, for example, has already saved engineering teams up to ten weeks of development time.

AI test automation is improving bug detection by some 25% and reducing QA costs by 30 to 40%, and our own clients are seeing enormous savings, faster time to market, and massive improvements in both coverage and code quality.

How PTP Can Help Implement Agentic AI

With more than 27 years in the technical recruiting and consulting business, PTP has a lot of experience with digital transformations of all kinds, and we’ve been very involved with AI from the start.

By taking advantage of nearshore talent and strategically pairing it with onshore teams, businesses are able to implement both cost-saving and quality-increasing improvements at highly reasonable prices.

Nearshore AI QA solutions thrive at implementing test case automation, migrating existing scripts, and providing initial validation, while onshore teams provide the strategy, governance, and overall testing management needed.

And by sharing or having closely aligned time zones, these partnerships offer greater flexibility for resource allocation and scaling, and can accelerate implementation in a safe, controlled way.

They’re lower cost than onshore and can be more easily and closely aligned than offshore, helping you migrate from your current system to a more agentic solution.

Conclusion

AI agents are currently at the center of AI hype, with the term being used for a huge variety of things, from genAI systems with a human-provided workflow and/or RAG all the way to reasoning systems that actually choose what, when, and how to execute within their allowed behavior.

And more is on the way. OpenAI made waves in March when they told investors they planned to soon offer agents that cost as much as $20,000 per month for PhD-level work.

But in the realm of AI-driven software validation, we’re already seeing agents improve test automation very rapidly and in a far more affordable fashion.

These innovations make the dream of good requirements mapping to cleanly executed applications, verified by fully covered and well-documented testing, an increasingly attainable goal.

And while such systems won’t remove the need for manual verification and human innovation any time soon, they take on repetitive work with a consistency and at a scale never before possible.

References

Building AI Agents to Automate Software Test Case Creation, Nvidia Developer Technical Blog

The Hidden Cost Of Bad Software Practices: Why Talent And Engineering Standards Matter, Forbes

OpenAI’s Deep Research Agent Is Coming for White-Collar Work, Wired

Tools of Smolagents in-depth guide, Smolagents Blog

Understanding, Testing, Fine-Tuning AI Model with HuggingFace, ExecuteAutomation

FAQs

Will AI Agents replace QA engineers?

Our founder and CEO recently wrote a piece about this, which you can read here. In short: no, but they are changing the role. AI agents thrive at handling repetitive tasks like generating regression tests and test data, maintaining scripts, running and re-running tests, and identifying clear defects. But engineers are still needed for higher-level strategy, exploratory testing, and compliance.

How do AI agents handle vague or incomplete requirements?

Not as well as we do. But they are improving fast. AI agents thrive with good structure (like Nvidia’s HEPH above using Jama inputs, though they can handle alternatives). When requirements are ambiguous or require interpretation, they’re more likely to lead to incomplete or incorrect testing. Agentic solutions are best when they include interactive options along with pure automation for this reason.

How long does it take to implement an AI testing agent?

There’s no one-size-fits-all answer to this question. You can be up and running with a Smolagents example on your own machine in a few hours, even with trial and error. But business-level implementation time depends on your tech stack, data and documentation hygiene, current test cases, budget, and scale. Many teams roll out AI-powered testing alongside existing systems very quickly (a matter of weeks) as they begin to cut over. A hybrid model using nearshore can accelerate setup, provide more human oversight, and scale as you need at a more affordable price point.